Superman, X-rays, and Extramission theories of vision.

The Man of Steel's visual capabilities make contact with folk theories of vision.

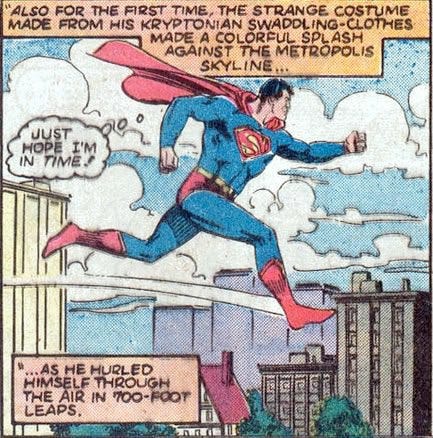

Superman. The man, the myth, the legend. Faster than a speeding bullet. More powerful than a locomotive. Able to leap tall buildings in a single bound because they didn’t go all the way for “he can fly” right away.

If you’re thinking about how the world looks different to various characters in the wide realm of popular media, Superman looms fairly large. I have to admit that in some ways, he’s such a conspicuous character to talk about in this context that I’m worried it’s a little boring to ring the changes on Kryptonian vision. I’m far from the first person to think about various aspects of Superman’s sensory abilities after all (you’ll see a number of references to point you towards other writers who have paved the way here) and in some ways Superman’s super-abilities have always struck me as, well…monotonous. Everything about him is just sort of super. Why? How? Something-something red sun of Krypton vs. yellow sun on Earth and so he has basically all of the powers you could wish for (once they decided that flying was cooler than jumping). What’s left to say? I think maybe quite a few interesting things as it turns out, all of which are concerned with the primary visual ability that we get to see Superman use in nearly all of his various incarnations: X-ray vision!

Still frame from Smallville. Fair use.

That’s right - as I’m sure you know, Superman can see through things when he wants to. There are limits to his powers (lead turns out to be impenetrable, for example) and there has been a good bit of variability to what he gets out of these views across media. Sometimes he sees skeletal figures like the figure above, but in some cases he sees some truly gruesome anatomical views of muscles and fascia. At other times, particularly in the older comics pages, it’s a lot more like the kind of thing that novelty “X-ray specs” used to promise: Basically, you get to see what you’re interested in, physics be damned! This variability aside, it’s clear that Superman has access to some part of the electromagnetic spectrum we don’t get to see with our all-too-human eyes, which raises some neat questions about how his visual system supports that. In particular, compared to some other sci-fi and speculative fiction characters, Superman’s X-ray vision is neat because it’s a sort of ancillary form of vision that apparently coexists alongside a visual system that allows him to see visible light the same way we do. How does that work? Specifically, how do you put together a visual system that gives you options for seeing visible light and X-rays?

There are a bunch of things I’m going to gloss over here in the interests of keeping our discussion focused. For example, I’m not going to worry about how he manages to sort of select whether he’s getting an X-ray view or a visible light view, or how it seems to work like a sort of X-ray spotlight sometimes. Those things we’re going to say are just fine and we won’t dwell on them further. For the first part of our discussion as well, I’m going to assume that there are ambient x-rays around for Superman to see, which is itself quite the assumption. The X-rays you’re familiar with in a medical or dental setting depend on shadowcasting X-rays onto a photosensitive medium and relying on the fact that they will go through some things (like soft tissue) but not through others (bone), leaving an impression of deep structures on the imaging medium. Superman doesn’t need to position the things he wants to see between an X-ray source and his eye, so we have to assume this means that objects are somehow illuminated by enough X-rays for him to see.

It’s fine.

Even with these issues safely solved, there are a couple of challenges for Superman’s X-ray vision to overcome that I think provide a nice opportunity to talk about how some of the first stages of seeing in your visual system work. To understand the nature of these challenges, we’ll start by talking about image formation in the human eye.

How to stop worrying and see x-rays (if they’re around).

X-rays are somewhere over the rainbow - trouble with refraction

If Superman is hoping to use his ability to detect X-rays to see things, his visual system should be capable of not just transducing light in that part of the spectrum, but should also form x-ray images in his eye that preserve the spatial organization of x-ray light as much as possible. What I’m really saying is that being able to detect x-rays with his retina is only going to be so useful if he’s stuck with an incredibly blurry image. After all, x-ray images are typically useful not just because they tell us that there are x-rays in the environment, but because we can distinguish between nearby parts of the image that did and did not have a lot of x-ray content. If you took a regular x-ray image like the ones you see at your doctor or dentist’s office and smeared the bright and dark parts of the image around, you’d have a very hard time seeing where there was a break in one of your bones or a cavity in one of your teeth. The mechanisms that support image formation in your eye are the first constraints on the quality of that image and it turns out that x-rays pose a fairly serious problem.

First, let’s talk about how the different parts of your eye contribute to image formation: How do patterns of light in the world become patterns of light in your eye? To understand how this works, we’ll need to know some principles of geometrical optics. This is a way of thinking about what light does that is based on imagining that light is best described in terms of rays. I think this is a fairly intuitive way for most people to think about light because the model you should have in your head in this case is basically an arrow - think of light as a thin beam that sort of shoots through the air (or whatever medium we’re talking about, which will matter soon!). Besides the assumption that light is a ray, we also assume a few other things about what light can do in this framework: (1) Light will travel in a straight line through any medium. (2) Light can be absorbed (soaked up) by some materials and will reflect (bounce off of) others. (3) Light can bend when it passes from one medium to another.

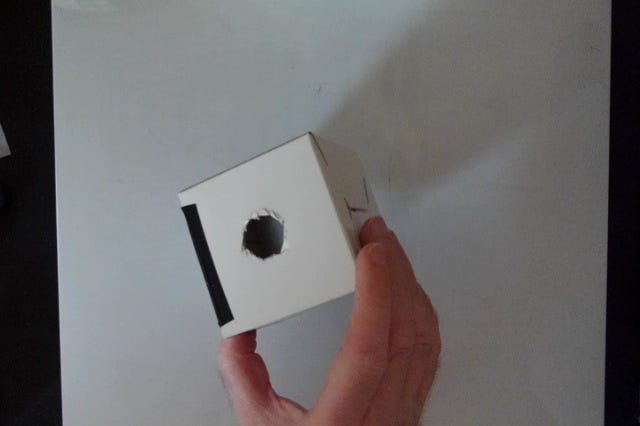

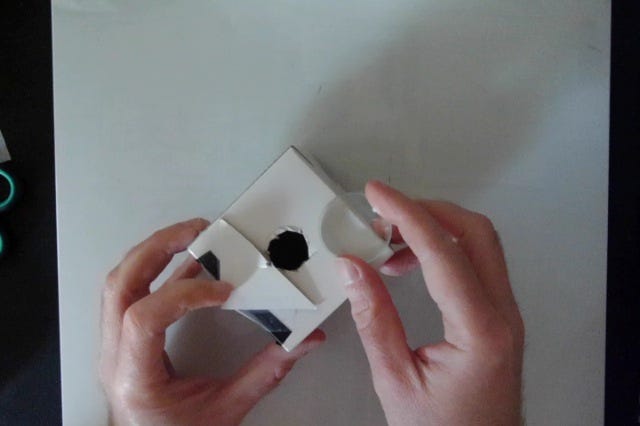

With these ideas in mind, a good way to start learning about image formation in the eye is to build your own model eyeball. Specifically, let’s use a simple optical device called a pinhole camera to investigate how patterns of light can be formed in your eye and what we need to do to preserve the spatial arrangement of light as much as possible so we can see differences between nearby parts of an image reasonably well. We can start with a small cardboard box, which is going to be our stand-in for the eye itself. We need to do two things to start playing with image formation in this model system - First, we need to poke a hole in the front of it to let some light inside. Next, we should give ourselves a way to observe that light as it arrives inside the box. The first part is easily achieved by poking a pencil-width hole in one of the sides of the box. The second part requires a little more papercraft skill, but all we need to do is cut out most of the side opposite our pinhole and replace the cardboard with some tracing paper. This gives us a screen of sorts that we can use to look at our patterns of light after they come into the box. What we’ve really done in these two steps is approximate two parts of the eye, namely the pupil and the retina. Already, we can use this model eye to see how images are formed inside the eye - hold the pinhole towards some bright light source and look for patterns of light on the screen. You may find that this is fairly tricky because that small pinhole lets in very little light, but if you can point it towards something bright and make the screen fairly dark, you should be able to see some things.

Ok, BUT - an incredibly dim image is no fun at all. Let’s enlarge the pinhole of our camera to let more light in, which should make the image easier to see. Indeed, if you make that pencil-width hole into something more like a finger-width hole, you’ll see a much brighter image. This should make it easier to notice the inverted nature of the retinal image, which is a simple consequence of how light from an object gets from the outside world, through the pinhole and onto the back of the screen. Those light rays from the top of an object have to pass through our pinhole in a straight line, so they end up aimed towards the bottom of our model retina (vice-versa for light rays coming from the bottom of the object). Let’s say you’re encouraged by this brighter image and decide to really go for it and make that pinhole more like the size of a dime or a nickel. This should be great, right? Tons of light and hopefully a much brighter image should follow. Indeed it does, but at no small cost: The resulting image is likely to be very bright, but also very blurry.

What’s going on? The issue here is another consequence of geometrical optics, but one that requires understanding something about how light is being reflected from objects and surfaces in the world. Any part of the object that has light bouncing off of it ends up reflecting that light in many directions. It’s almost like every part of the object is a new light source that radiates reflected light in all directions (though the nature of the material determines how much it’s really all directions, but I digress). What this means is that the larger pinhole you made gives light rays coming from the same part of the object different paths to get through the hole and onto the screen. Some of them will pass through the top part of the hole and end up on one part of the screen, while others will pass through the bottom part of the hole and end up in a different spot. This is happening for every part of the object, so the end result is a smeared out, blurry image that is the sum of many copies of the image all being projected onto your model retina in slightly shifted locations.

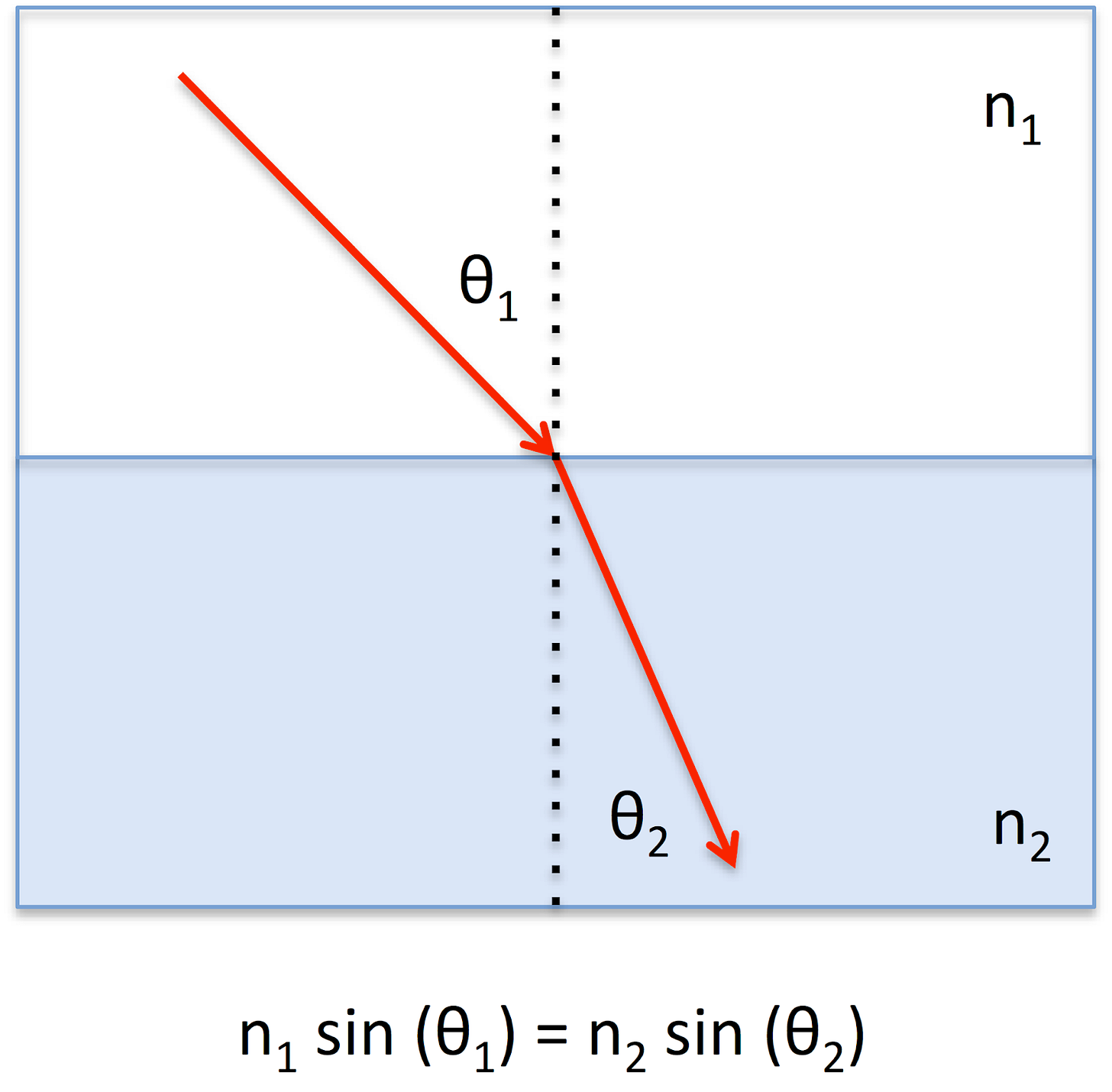
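If you’d like to put rough numbers on that trade-off, here is a minimal Python sketch of the similar-triangles geometry behind it. The function names and the box and hole sizes are made up for illustration, and it assumes an ideal point of light and a flat screen, so treat it as a back-of-the-envelope model rather than a description of any particular cardboard box.

```python
def image_height(object_height, object_distance, screen_distance):
    """Height on the screen where the ray through the center of the hole lands.

    The negative sign is the inversion: points above the hole's axis
    end up below it on the screen.
    """
    return -object_height * screen_distance / object_distance


def blur_diameter(hole_diameter, object_distance, screen_distance):
    """Width of the patch that ONE point of the object paints on the screen.

    Rays from that point fan out through opposite edges of the hole, so by
    similar triangles the patch is a slightly magnified copy of the hole.
    """
    return hole_diameter * (object_distance + screen_distance) / object_distance


if __name__ == "__main__":
    # Hypothetical setup (all lengths in meters): lamp 2 m away, box 0.2 m deep.
    object_distance, screen_distance = 2.0, 0.2
    for label, hole in [("pencil", 0.007), ("finger", 0.016), ("nickel", 0.021)]:
        blur = blur_diameter(hole, object_distance, screen_distance)
        print(f"{label:>6}-sized hole: blur patch about {blur * 1000:.1f} mm wide")
```

In this toy model the blur patch grows in direct proportion to the hole, which is why the brighter image is also the smearier one.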

What to do about this? To address this issue, we’ll add one more element to our model system that will improve things a lot - a lens. Remember that one of our assumptions about light in geometrical optics is that light can change direction or refract as it passes from one medium to another. If you’ve ever seen a straw look “broken” while it’s sitting in your glass of water, you’ve seen the consequences of this bending. We can use this property of light to introduce a small mechanism for organizing the different copies of our image that are being allowed through our larger pinhole. See, what we’d like to do is make it so that all those light rays coming from the same part of the object end up at the same part of the screen instead of slightly shifted locations. To do that, we need to bend some of the rays a little bit and some of the rays by rather a lot, depending on where they’re coming into the pinhole. There are basically two ways to mess with how much light will bend during refraction: You can change the medium it’s passing through, or you can change the direction it’s hitting the new medium from. The way both of those factors affect refraction is captured by an equation called Snell’s Law, which describes how the direction light will take on the other side of an interface between two media depends on what the two media are made of and the direction of the incoming light relative to the surface between the two materials.

DrBob at the English-language Wikipedia, CC BY-SA 3.0 <http://creativecommons.org/licenses/by-sa/3.0/>, via Wikimedia Commons
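For the curious, Snell’s Law is compact enough to try out in a few lines of Python: n1 × sin(θ1) = n2 × sin(θ2), with both angles measured from the line perpendicular to the surface. This is only a sketch. The function name is made up, and the refractive indices are approximate textbook values rather than measurements.

```python
import math

# Approximate refractive indices for visible light (illustrative values).
AIR = 1.000
WATER = 1.333
CORNEA = 1.376


def refraction_angle_deg(n1, n2, incidence_deg):
    """Angle of the refracted ray (degrees from the surface normal).

    Solves n1 * sin(theta1) = n2 * sin(theta2) for theta2. Returns None
    when no refracted ray exists (total internal reflection).
    """
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Changing the medium: the same 45-degree ray bends more entering the cornea.
    for name, n2 in [("water", WATER), ("cornea", CORNEA)]:
        print(f"air -> {name}: {refraction_angle_deg(AIR, n2, 45.0):.1f} degrees")
    # Changing the direction: steeper incidence, bigger change in direction.
    for incidence in (10.0, 30.0, 60.0):
        bent = refraction_angle_deg(AIR, CORNEA, incidence)
        print(f"incidence {incidence:.0f} -> refracted {bent:.1f} degrees")
```

The two loops correspond to the two knobs mentioned above: what the new medium is made of, and the direction the light arrives from.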

The bottom line is that there is a shape that turns out to deliver most of what we want for good image formation in the human eye: A convex lens. The way that the curvature of such a lens varies over the surface of the pinhole turns out to be something we can choose so that each copy of the incoming pattern of light from an object is bent by the right amount and ends up in the same place on our model retina. The result is an image that is bright and much clearer, which we can see if we drop a small acrylic lens in front of our model eyeball’s pinhole. That small acrylic lens I’m putting on my pinhole camera in the image below is standing in for your own eye’s cornea, which is a bit of tissue that sits in front of your pupil and does most of the bending of light rays to support clear image formation in your eye. You also have a crystalline lens that you can adjust the curvature of to do a little more refraction work, but we’ll leave this out of our discussion for now. The main point is that getting clear images depends on this process of bending light by the right amount. What does all this mean for Superman’s X-ray vision?

To consider how image formation might work for x-ray vision, I need to tell you one more thing about refraction that I didn’t mention up above: Refraction doesn’t just depend on the direction of incoming light at the interface between two media and the materials that those media are made from. It also depends on the wavelength of the light - those n’s in the equation above stand for the refractive index of the material, which turns out to depend on the wavelength of light (for most transparent materials and visible light, the index gets smaller as the wavelength gets longer).

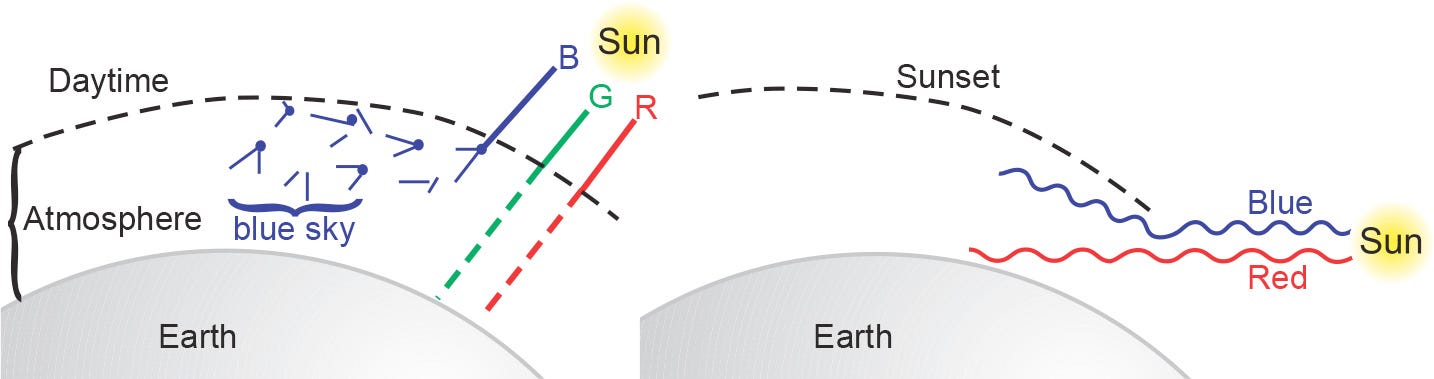

The point here for us is not the change in the equation though, but what that change means for the behavior of light in an eyeball with a lens. Specifically, it means that light with different wavelengths will bend by different amounts when it passes through a lens. If you happen to have some laser pointers lying around and large enough acrylic lenses, you can see this by measuring where horizontal red, green, and blue light rays converge when they pass through a vertically-oriented lens. It’s also something that’s evident in cheap telescope and binocular lenses that suffer from what’s called chromatic aberration. The different bending of light with different wavelengths leads to offset images of the same object that are different colors, which leads to multi-color fringes at the edges of shapes. Speaking of multi-color fringes, you may not have known this fact about refraction vis-a-vis Snell’s Law, but you very likely knew about it from seeing a rainbow: Those distinct bands of color are the result of white light composed of many different wavelengths of light being spread out by refraction, placing light with a different wavelength (and thus a different color to your visual system) in a different place.

By DrBob at the English-language Wikipedia, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=46592651; By Stan Zurek - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=923955
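To get a feel for the size of this effect, here is a small Python sketch. It assumes Cauchy’s empirical equation, n(λ) = A + B/λ², as the description of how refractive index changes with wavelength, which is one common approximation and not necessarily the form shown in the figure above. The coefficients are roughly those often quoted for a common crown glass, and the lens (a thin plano-convex lens with a 50 mm radius of curvature) is hypothetical.

```python
def refractive_index(wavelength_um, a=1.5046, b=0.00420):
    """Cauchy's empirical fit: n = A + B / wavelength^2 (wavelength in microns).

    The default A and B are approximate values for a crown glass and are
    only meant to describe visible light.
    """
    return a + b / wavelength_um ** 2


def plano_convex_focal_length(radius_mm, wavelength_um):
    """Thin plano-convex lens in air: 1/f = (n - 1) / R."""
    n = refractive_index(wavelength_um)
    return radius_mm / (n - 1.0)


if __name__ == "__main__":
    # Representative wavelengths for each color, in microns (illustrative).
    for color, wavelength in [("red", 0.65), ("green", 0.55), ("blue", 0.45)]:
        n = refractive_index(wavelength)
        f = plano_convex_focal_length(50.0, wavelength)
        print(f"{color:>5}: n = {n:.4f}, focal length = {f:.2f} mm")
```

Under these assumptions the blue light comes to a focus a couple of millimeters closer to the lens than the red light does, which is the kind of mismatch that shows up as colored fringes.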

What this means in terms of your eye and image formation is that the problem is a little bit trickier than I made it out to be. Getting a nice clear image that retains the spatial pattern of light from the outside world inside your eye and on your retina is a matter of bending light that’s passing into your eye from different directions AND with different wavelengths. As it turns out, your eye doesn’t actually do very much to deal with the different refraction of short and long-wavelength light, so you’re just kind of stuck with the fact that short-wavelength light (bluish stuff) is generally not in focus if long-wavelength light (reddish stuff) is in focus. That difference between red and blue light contributes to chromostereopsis, which is one of my favorite visual effects. Because red and blue light are focused at different distances, a simple figure made with red and blue color in the foreground/background may look like it has depth in it. Take a look at the image below, for example: Does the foreground shape look like it’s sort of floating either in front of or behind the background? If so, you’re experiencing a neat consequence of how your visual system uses differences in blurriness as a cue to the relative depth of objects in a scene. Because the red light and the blue light aren’t ever both in focus, that’s a clue that one or the other may be closer to you, and that clue can lead to a subjective experience of depth.

Fred the Oyster, CC0, via Wikimedia Commons

So what about Superman and his X-rays? All this means that he’s got quite a problem if he’s trying to get clear images of X-rays: As Orzel (2016) points out in his article about the optics of Superman’s X-ray vision, materials like glass, acrylic, or the tissue of your cornea aren’t even going to refract X-rays the way they bend visible light at all. Even if they did somehow, the wavelength-dependency of refraction means short wavelengths bend more than long wavelengths and X-rays have a wavelength that is a good bit shorter than any visible light! Superman’s cornea, crystalline lens, and eyeball are thus going to be ill-suited to focusing both visible light and X-rays, which means one or the other is likely to be extremely blurry. The troubles with X-ray vision thus start right at the pupil and the cornea as we try to form good images. Things also only get trickier the further we go into the eye.

Packing X-ray detectors into the retina

Let’s say that the troubles with image formation using X-rays are rendered irrelevant by the fact that Superman turns out to be super. The last son of Krypton can bend steel with his bare hands after all, so why can’t he just bend his eyeball or something so that the X-rays come into focus? I mean, the guy turns out to be able to do all kinds of stuff that isn’t limited to leaping tall buildings in a single bound and whatnot. Hell, you get Red Kryptonite involved and the sky’s the limit.

Anyway.

If we decide to overlook the troubles with X-ray image formation in the eye, this would move us on to the next step in pattern vision, which is the transduction of light by the retina. Light in the environment becomes electrical signals in your nervous system via specialized cells called photoreceptors, specifically two classes of cells that you may have heard of before: The rods and the cones. If you’d like to hear a little more about how the rods and cones contribute to your ability to see color (and the color discrimination abilities of other fictional beings), you might want to check out my discussion of the Predator’s color vision (Balas, 2022). For our discussion about Superman and X-ray vision, we don’t need quite as much detail, but we do need one key fact: Your different photoreceptors, in particular the cones, vary in their sensitivity to different wavelengths of light. Some of your cones absorb long-wavelength light (which would look reddish to you) very well, while others absorb short-wavelength light (which would look bluish to you) particularly well. For Superman to be able to see X-rays, he’ll have to have some photoreceptors that will absorb these especially short wavelengths, too. This isn’t such a problem as it turns out - plenty of other species have more cone subclasses than we do, so it isn’t such a big deal for Superman to have a 4th class of cone hanging out somewhere in his retina. Not a big deal, that is, except for one thing: Where exactly should he put them? That is, how do we arrange the cones that he’ll use to see the colors you and I can see in the visible spectrum and this other X-ray cone so that he can see both kinds of light? Now that you mention it, how are those three cone sub-classes that you (probably) have arranged on your retina? We can take a look at this in the figures below.

By Mark Fairchild, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=32075732

In the figure above, the cones are color-coded according to the wavelength of light that they absorb best: Long-wavelength cones appear red, medium-wavelength cones appear green, and short-wavelength cones appear blue. At first, this might look a little like it’s just a sort of smushed-together grid of all three colors, but if you look a little more closely, you’ll see that there are some interesting things going on here. First of all, you might notice that the red and green dots outnumber the blue ones by quite a bit. Why does your retina have so many more long- and medium-wavelength cones compared to the short-wavelength ones? It also looks like those blue dots tend to be pretty far apart from other blue dots, but the red and green dots all cozy up right next to neighbors of the same color. Finally, take a look at the central part of the picture - see any blue dots? There is definitely something up with short-wavelength cones here: They’re fewer in number, they’re spaced far apart, and they’re completely absent from the central part of the retina. To understand what’s going on here, we need to say a little more about different kinds of light as well as how your visual system supports your ability to see detail in patterns of light.

The first thing we should talk about is that there’s one more thing that light does that (1) depends on wavelength and (2) affects a number of things about the way things look to you. Light will scatter as it passes through a medium in which there are small particles. The atmosphere, for example, has all sorts of molecules that sunlight can bounce off of on its way from the sun to your eye. The small size of those molecules leads to a type of scattering called Rayleigh scattering, which depends on the wavelength of light such that shorter wavelengths will scatter more. This is the basis for two phenomena you’ve probably noticed in your everyday experience - the blue color of the sky and the reddish color of the rising/setting sun. If you imagine that sunlight (which is composed of light across the visible spectrum) is passing through the atmosphere on its way to your eye and scatters, or bounces, off of atoms and molecules along the way, the fact that the shorter-wavelength bluish light will scatter more means that this is another way in which colors are being distributed differently in the environment. The blue light will be scattering like mad, while the greenish and reddish light will make its way through the atmosphere with comparative directness, with the consequence that the blue light will end up looking to you like it is arriving from all directions, because it is! Therefore, the sky is blue. What about that reddish sun at sunrise and sunset? It’s the same story, but now we have to apply it to the light that’s coming directly from the sun to our eye as we look at it moving under the horizon. That view of the sun as it approaches the ground plane places a good bit more atmosphere between us and the incoming light, which means more opportunities for short-wavelength light to scatter. 
Again, the reddish light will scatter less between the sun and our eye, leaving our incoming light rays biased towards the unscattered long-wavelength end of the spectrum due to the scattering of the short-wavelength light away from the path to our eye. And there you have it, scattering and its dependence on wavelength for small particles explains some optical phenomena and starts to give us a handle on what’s up with short-wavelength cones in the retina.

Image adapted from https://ltb.itc.utwente.nl/509/concept/89052
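How much more does blue light scatter? For Rayleigh scattering the strength goes as 1/λ⁴, and a few lines of Python make the comparison concrete. This is a rough sketch: the function name is made up, the wavelengths picked to stand for “blue”, “green”, and “red” are illustrative, and the 1/λ⁴ rule only holds when the scattering particles are much smaller than the wavelength.

```python
def rayleigh_relative_scattering(wavelength_nm, reference_nm=650.0):
    """Scattering strength relative to the reference wavelength.

    Rayleigh scattering is proportional to 1 / wavelength^4, so the ratio
    between two wavelengths is (reference / wavelength)^4.
    """
    return (reference_nm / wavelength_nm) ** 4


if __name__ == "__main__":
    for color, wavelength in [("blue", 450.0), ("green", 550.0), ("red", 650.0)]:
        ratio = rayleigh_relative_scattering(wavelength)
        print(f"{color:>5} ({wavelength:.0f} nm): {ratio:.2f}x the scattering of red")
```

With these numbers, blue light scatters a bit more than four times as strongly as red light, which is plenty to tint the whole sky.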

One way to think about this issue of blue light scattering more than reddish or greenish light is to say that blue light is a problem in the environment before we even start thinking about image formation and pattern vision in the retina. Seeing detail in a pattern of light depends on retaining the spatial organization of light during image formation, but it turns out that blue light may already be spatially disorganized by scattering before it arrives at the pupil! What are we supposed to do about that? In a word: Nothing. We can’t unscatter light from the environment with optical trickery in the eye, so we’re better off just sort of living with the fact. A better way to put this is to say that a biological investment in measuring fine details of blue light is unlikely to be worth it, so strategies for pattern vision that don’t support such processing will probably be favored. What do I mean by an investment in measuring fine details? What I mean is really two things: (1) Stuffing in as many photoreceptors as you can - if you think of these cells as the pixels in the image you are measuring, more cells means your image is less blocky and more detailed. (2) Having photoreceptors of the same type close to one another. While the first point probably isn’t too hard to grasp, that second one turns out to just be something you need to know about the physiology of your visual system. High acuity depends on comparisons between neighboring cones of the same type (Snowden, Thompson & Troscianko, 2006), so having a long-wavelength cone right next to a medium-wavelength cone isn’t helping your ability to see detail very much.

Here’s how all this intersects with the issue posed by the scattering of blue light. Because blue light is likely to have been scattered a good bit in the environment, a retina with lots of short-wavelength cones packed next to each other isn’t really worth it. Instead, go ahead and let those cones be spaced out, and let the cones most sensitive to light that won’t scatter so much be packed in close to their buddies. While you’re at it, if you’re planning to pack photoreceptors together very tightly in any part of your retina, you may as well leave out the short-wavelength cones completely. The point of that tight packing is to get the highest acuity you can after all, and high acuity isn’t a meaningful idea when light scatters a ton! This logic leads us to the layout of the human retina: a small number of more spaced-apart short-wavelength cones, with a central portion where they are entirely absent.

Alright, what about a Super-retina that can sense X-rays? It will need its own photoreceptor sub-class, but we’ve got to add it into this mosaic of cells somehow - where does it go? Anywhere we start dropping in X-ray photoreceptors is going to start compromising the acuity that’s achievable with the other cones. That is, we can’t make room for new X-ray cones without pushing apart some of the neighboring visible light cones that were helping achieve high acuity for visible light in their original arrangement. The more we want to pack X-ray cones close together, the worse it’s likely to get for the acuity of visible light. But wait a minute - didn’t we say that light with a short wavelength tends to scatter more? X-rays have an even shorter wavelength than blue light, so packing those photoreceptors together won’t even matter that much. X-ray scatter can easily compromise radiological images after all (see below), so it’s not too hard to imagine that the problem would translate to ambient X-rays Superman would try to measure with his Super-retina. The bottom line is that trying to cram an X-ray photoreceptor onto the Man of Steel’s retina is going to be a tough task if we want him to be capable of seeing fine detail with either slice of the EM spectrum.

Unfortunately, all of this seems like it means that X-ray vision is kind of a non-starter, at least if it has to rely on the same mechanisms we know our visual system employs for sensing visible light. This is part of why I actually love a paper written by Ben Tippett (2009) in which he offers a unified theory of how Superman’s powers work: His idea is that what Superman can really do is change the inertia of pretty much anything he wants to. This gets him flight, it gets him invulnerability to bullets and such, AND it means he doesn’t need to actually sense X-rays! Instead, he can nudge light around the electromagnetic spectrum at will so that he converts electromagnetic energy into X-rays to penetrate things he wants to see through, but then converts it back to visible light when it gets to his retina. No need for an extra photoreceptor, no need to refract really short-wavelength light - done and done. It’s a neat idea (though obviously beyond the scope of our discussion of human vision), and I will always be glad that there are more people in the world thinking about things like this.

Extramission (or maybe X-tramission?) Vision

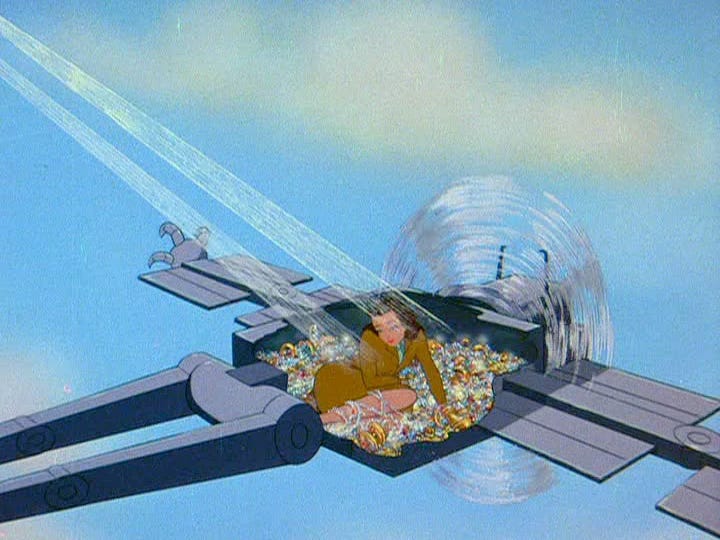

Besides the proposal that Superman does some intense work to change the inertia of light, all of my previous discussion about the optics of X-rays and the structure of the retina is missing a very important point. All of this presupposes that what Superman’s visual system is doing is transducing X-rays the same way that you and I transduce visible light from the environment. But as decades of comics, movies and TV shows have shown us, Superman’s X-ray vision doesn’t seem to have much to do with sensing ambient X-rays from the environment. The atmosphere is sufficiently thick that there aren’t too many of these making it to the Earth’s surface from the Sun anyway and while things like radon gas and other radioactive sources do produce terrestrial X-rays, that’s likely not enough to give Supes reliable opportunities to peer inside things at will. No, it’s pretty clear from lots of depictions of Superman’s X-ray vision that whatever is happening here doesn’t have much to do with light in the environment, but relies instead on whatever the heck he’s able to shoot out of his eyes.

Movie still from a 1940s-era Superman short. Fair use.

The thing is, I can’t do much to relate these beams to anything in your visual system because you don’t shoot anything out of your eyes to see. If you’re interested in some compelling discussions of the possible physics of these beams, how our sun makes it possible for Superman to do this, etc. those are out there on the wide, wide plains of the internet, but it’s not what I’m going to talk about. Instead, I’m going to take this opportunity to talk about something that I think is a fascinating corner of the vision science literature: The folk belief that you DO shoot something out of your eyes to see, or more formally, extramission theories of vision.

Extramission vision: An idea with a long history

Maybe it sounds a little bit strange, but if you’re going to try and understand how vision works, you should start by deciding whether stuff goes into the eye when you see or if stuff shoots out of the eye instead. As Gorini (2003) describes, a number of ancient thinkers (Ptolemy, for one, who also got the solar system wrong) favored the extramission theory that vision proceeds due to rays that emanate from the eye. Euclid in particular described the geometry of seeing using light rays that emanated from the eye - a mathematical treatment that got a ton of things right but suffered from an unfortunate sign error on the arrows. The thing is that vision feels directed! You point your eyes at stuff to see things after all, so surely that indicates something like the “fire in the eye” that Empedocles wrote about. Other philosophers including Plato and…I’m sorry for this, Aristotle…favored at least some inclusion of an intromission theory whereby rays entered the eye to enable seeing, but didn’t clearly reject extramission’s possible role in seeing.

The real hero of this story is Hasan Ibn Al-Haytham. Al-Haytham was an Arab scholar who is responsible for a number of important breakthroughs in understanding the visual system, including the first real attempt to synthesize everything known about the mathematics of light, the physiology of the eye, and the experience of seeing in his Kitab al-Manazir (or Book of Optics). Al-Haytham was the first to describe seeing as the result of light that emanated from all points of an object into the eye and also identified the brain as the ultimate substrate for visual processing. There were shortcomings to his theory for sure - in particular, he struggled to explain how the light radiating in all directions from each point on an object ended up at one point on the retina. Still, in terms of a clear presentation of how vision might work based solely on intromission rather than extramission, his work is the starting point. As someone who has worked in vision science for some time, I have to take a second to tell you that you really ought to go find out more about Al-Haytham. He was a brilliant and fascinating person and to this day I still live in mild-to-moderate fear that any topic I’m currently working on may have been more or less explained perfectly by him centuries ago.

So there you have it, right? Sure, there were some early missteps during that whole eyebeams-shoot-out vs. lightbeams-shoot-in argument, but we worked it out.

Didn’t we?

Extramission vision: A sticky idea

While formal theories of vision have been based on intromission for a long time, it turns out that the popular understanding of vision is more complicated. Extramission theories of vision turn out to be part of the conceptual toolkit that both children and adults use as the basis for their intuitive understanding of the world. Winer & Cottrell (1996) point out that it isn’t unusual at all for young children to be a bit miscalibrated about a number of basic scientific facts - most young kids turn out to be flat-Earthers for example, but most of them (most) will come around (see what I did there?) to the fact that the Earth is round by adulthood. Adults are also likely to reason incorrectly about a number of physical phenomena, particularly with regard to predictions about bodies in motion. Take one of my favorite examples of this kind of error: Imagine you’re re-enacting the best scene from Return of the Jedi by swinging a rock above your head in a sling, hoping to send it flying at your big brother. If he’s standing right in front of you, where should the rock be in its circular motion when you let go? Many adults will tell you that you should let go when it’s right in front of the target, with the idea being that the rock will shoot straight forward from there. Another big mode in the data is that many people think that the rock will sort of keep curving a bit after you let it go, so you need to anticipate that bending of its path after release and let it go when it’s more or less right at your side. Neither of these answers is right, though. To get it right (and importantly, to hit your brother with the rock) you need to know that when released, the rock is going to continue in a straight line that is tangent to the circle you were swinging it around in. This means that you have to let go of it well before it gets to that point directly in front of you, but after it passes the point directly to your side. 
The issue here is that there are answers that just sort of feel right, but aren’t supported by the mathematics or the actual experience of doing this kind of thing. Just ask an Ewok - he’ll tell you.
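If you want to check the sling geometry for yourself, here is a minimal Python sketch of the tangent-line answer. It assumes an idealized setup that isn’t part of the studies discussed here: the rock moves counterclockwise (seen from above) on a flat circle centered on you, your brother stands straight ahead, and gravity and air resistance are ignored. The function names and the numbers in the usage lines are made up for illustration.

```python
import math


def release_angle_deg(radius, distance):
    """Degrees past the 'directly to your side' point at which to let go.

    Assumes the thrower is at the origin, the target is straight ahead at
    `distance`, and the rock circles counterclockwise at `radius`.
    """
    if distance <= radius:
        raise ValueError("target must be outside the swing circle")
    # The tangent at the release point passes through the target
    # exactly when sin(angle) = radius / distance.
    return math.degrees(math.asin(radius / distance))


def sideways_miss(radius, distance, angle_deg):
    """Sideways miss (same units as radius) for a release at angle_deg.

    Returns math.inf if the rock never travels forward to the target's range.
    """
    phi = math.radians(angle_deg)
    px, py = radius * math.cos(phi), radius * math.sin(phi)  # rock position
    vx, vy = -math.sin(phi), math.cos(phi)                   # tangent direction
    if vy <= 1e-12:
        return math.inf
    t = (distance - py) / vy  # how far along the tangent until range is reached
    return px + t * vx


if __name__ == "__main__":
    angle = release_angle_deg(1.0, 10.0)
    print(f"release {angle:.2f} degrees past your side")
    print(f"miss at that angle: {sideways_miss(1.0, 10.0, angle):.6f}")
    print(f"miss if released directly in front: {sideways_miss(1.0, 10.0, 90.0)}")
```

With a 1 m sling and a target 10 m away, the sketch puts the release point about 5.7 degrees past the point directly to your side. Releasing directly in front sends the rock off sideways, so it never reaches your brother at all.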

Movie still from Return of the Jedi. Fair use. (Sorry, Wicket - it’s an important lesson).

It turns out that for many young kids and a surprisingly large fraction of adults, what feels intuitive in terms of vision is that something comes out of your eyes that allows you to see. Winer and Cottrell reported in their 1996 paper that around half of kids of various ages and a third of adults endorsed an extramission theory of vision in various ways. These results depend a good bit on how you present the question though: In particular, graphics depicting rays coming into or out of the eye turned out to negatively impact performance! Among both adults and children (Winer et al., 1996), purely verbal presentations of the question (do rays come in or out of the eye when you see?) led to more frequent intromission responses than graphics presentations did. What exactly is going on here? One possible account of these results is that this depends on adults and kids mixing up mechanisms of vision that are based on physics and physiology with symbolic understanding of the act of seeing. That is, one key aspect of human vision is that we move our eyes around the world to put specific parts of a scene in our fovea, the part of the retina with the best acuity. That willful exploration of a scene with our eyes, the choice to direct our gaze at one part of an image instead of another, may be something that intuitively feels like it ought to be included in our description of what vision is. Whatever the reason for these responses, they turn out to be fairly robust - what I like to call sticky. Beliefs in extramission do tend to decline with age (Cottrell & Winer, 1994), but they don’t drop all that far - one-third of college students is still rather a lot. Even direct educational interventions designed to reinforce intromission reasoning about vision turn out to have a fairly transient effect: Beliefs shift away from eye-beams for a while, but among college students it doesn’t take long for them to revert to their original extramissionist ideas (Gregg et al., 2001).

More recent work has challenged the idea of widespread explicit extramissionist beliefs about vision in adults, however, while also demonstrating in some creative ways that an implicit feeling that something comes out of the eyes is evident in adults’ reasoning. Specifically, Guterstam et al. (2019) assessed observers’ adherence to extramission-based reasoning in two ways: (1) They just asked ‘em, more or less like the studies I described above did, and (2) they asked participants to reason about a physical task that incorporated a person’s gaze. With regard to (1), the story is fairly simple and maybe a little disappointing if you thought it was neat that adults tended to get vision wrong: Only about 5% of their participants chose extramission options when asked directly about it. The results from their physical reasoning task, however, are exceptionally neat.

Image adapted from Guterstam et al., 2019.

Suppose you have a thin tube sitting upright on a table. If you start to tilt that tube away from vertical, eventually you’ll pass a point of no return and it will tip all the way over. Where is that threshold? By itself, this is a reasoning task that assesses what you know about things like the center-of-mass of an object and how you visually evaluate its position relative to an object’s base of support. If we ask participants to tilt the tube so that it’s right at the point of collapse, we’ll get a sense of how accurate they are at visually thinking through these physical relationships. But what about extramission vision? This is where it gets really fun: When we show people a picture of this tube that they get to adjust, let’s include an image of a person facing the tube either with their eyes open, or their eyes closed. If you’re only reasoning about the tube and the physics of a rigid object, this should just be decoration. On the other hand, if you implicitly think that there is some sort of force or beam coming out of the eyes, maybe, just maybe, you adjust the tube differently when the eyes are open (so that the eye-beam would sort of push the tube back up a little bit) than when they’re closed (so there’s no eye-force to help out). I’m describing this in enough detail that I’m sure you’ve guessed what happened: It does matter when the eyes are open, which suggests people reason about vision at least in part using a model that incorporates gaze as a force that comes out of the eyes! To be fair, this is a little less like Superman and a little more like Cyclops from the X-Men (no matter what comics writer Gail Simone has to say about his eyebeams!), but the point stands: Extramission vision is surprisingly intuitive and sticky. Nothing comes out of our eyes to help us see, but we sort of think it does anyway. 
When Superman scans the inside of buildings, safes, or cars like he has his own X-ray spotlight, it may be extraterrestrial and Super, but it also probably feels just a bit like it kind of makes sense.
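For the physical side of the tube task, the tipping threshold itself can be sketched in a few lines of Python. This assumes a uniform, rigid tube whose center of mass sits at mid-height and that tips about the edge of its base. The function names and dimensions are hypothetical and are not taken from Guterstam et al. (2019). It covers only the physics baseline, not any effect of the onlooker’s gaze.

```python
import math


def critical_tilt_deg(height, diameter):
    """Tilt from vertical (degrees) at which a uniform tube balances on its base edge."""
    if height <= 0 or diameter <= 0:
        raise ValueError("height and diameter must be positive")
    # The center of mass is height/2 up the axis and diameter/2 in from the
    # pivot edge, so it sits directly over the pivot when
    # tan(tilt) = diameter / height.
    return math.degrees(math.atan2(diameter, height))


def tips_over(height, diameter, tilt_deg):
    """True if a tube tilted by tilt_deg is past the point of no return."""
    return tilt_deg > critical_tilt_deg(height, diameter)


if __name__ == "__main__":
    # Hypothetical tube: 30 cm tall, 5 cm across.
    print(f"critical tilt: {critical_tilt_deg(30.0, 5.0):.2f} degrees")
    print("tips at 12 degrees:", tips_over(30.0, 5.0, 12.0))
```

A tall, skinny tube has a small critical angle, which is part of why the task is a sensitive one: small shifts in where people set the tube are easy to measure against this baseline.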

Conclusion

Would it be great to be able to see through stuff at will? Probably. Is it easy to achieve? Absolutely not. Nonetheless, thinking through Superman’s X-ray vision and the things that would constrain it helps us see how our own optical system for forming images and transducing light achieves some great things that support impressive, if not Kryptonian, visual abilities. Further, while Superman shooting X-ray beams out of his eyes saves him (and his writing team) from worrying about all the optical stuff we talked about in the first part of this essay, it also reveals a striking feature of how we reason about our own vision. Extramission theories of vision are an error, but as with all folk reasoning about the sciences, it’s important not to just dismiss them. Instead, they clue us in to persistent modes in our cognition about ourselves that can become their own object of study, or at least inform the way we try and teach others about our vision and how it works.

References

Balas, B. (2022, August 15). The Predator: A Vision Science Perspective. https://doi.org/10.31234/osf.io/gw8vq

Cottrell, J. E., & Winer, G. A. (1994). Development in the understanding of perception: The decline of extramission perception beliefs. Developmental Psychology, 30(2), 218–228. https://doi.org/10.1037/0012-1649.30.2.218

Guterstam, A., Kean, H. H., Webb, T. W., Kean, F. S., & Graziano, M. (2019). Implicit model of other people's visual attention as an invisible, force-carrying beam projecting from the eyes. Proceedings of the National Academy of Sciences of the United States of America, 116(1), 328–333. https://doi.org/10.1073/pnas.1816581115

Pittenger, J. B. (1983). On the plausibility of Superman’s x-ray vision. Perception, 12, 635–639.

Gorini, R. (2003). Al-Haytham the man of experience. First steps in the science of vision. Journal of the International Society for the History of Islamic Medicine, 2(4), 53–55.

Gregg, V. R., Winer, G. A., Cottrell, J. E., Hedman, K. E., & Fournier, J. S. (2001). The persistence of a misconception about vision after educational interventions. Psychonomic Bulletin & Review, 8(3), 622–626. https://doi.org/10.3758/bf03196199

Orzel, C. (2016). The Optics of Superman’s ‘X-Ray Vision.’

Tippet, B. (2009). A Unified Theory of Superman’s Powers. https://qwantz.com/fanart/superman.pdf

Winer, G. A., & Cottrell, J. E. (1996). Does anything leave the eye when we see? Extramission beliefs of children and adults. Current Directions in Psychological Science, 5(5), 137–142. https://doi.org/10.1111/1467-8721.ep11512346

Winer, G. A., Cottrell, J. E., Karefilaki, K. D., & Gregg, V. R. (1996). Images, words, and questions: Variables that influence beliefs about vision in children and adults. Journal of Experimental Child Psychology, 63(3), 499–525. https://doi.org/10.1006/jecp.1996.0060

Wininger, K. L. (2013). A look at Superman's X-ray vision. Radiologic Technology, 84(5), 530–535.