Geordi La Forge’s VISOR and the promise of prosthetic vision

Connecting a 24th-century prosthetic to our 21st-century understanding of human vision.

Introduction

I’ve been a Trekkie for as long as I can remember. I watched the original series in syndication in the basement of our first house and was drawn in by the slow menace of the Tholian Web, the tense encounters with the Romulans, and the sad, sad fate of all those security officers. My family used to go to Star Trek movies on opening night and wade past local cosplayers advertising for Klingon Summer Camp (I never got to go) and tables with vendors selling blueprints for starships and starbases (I never remembered to save up for these). I scored a ton of swag from the premiere of The Search for Spock and remember seeing my Mom cry when they blew up the Enterprise. My brother had the Enterprise bridge playset from ST: TMP, which was complete with action figures that had knees that didn’t bend and a turbolift that was just a trapdoor, sending Decker and the rest down to the Lower Decks no matter what button they may have pushed.* My mom had a long-standing regret focused on a wooden model of the NCC-1701 that she saw at a regional craft fair and decided she couldn’t afford, only to kick herself later as she recalled just how cool the thing looked. Years later she finally saw this vendor again and didn’t let the opportunity pass a second time - the ship still stands in their living room and it is legitimately awesome. I practiced and practiced getting my fingers to do the Vulcan LLAP gesture until I could do it with either hand, though my right hand still shakes when I do it.

*The pedant in me has to point out that the bridge is at the top of the ship, so the trapdoor turbolift isn’t totally inaccurate. It’s still hilarious though, and my brother and I used to push the button to make them drop followed by a loud AAAAAAHHHHHH.

What I’m saying is that I’m a fan. If it’s Trek-related, I’m on board.

Star Trek: TNG is really my Star Trek, though. Oh sure, I watched DS9 and I listen patiently while one of my current gaming buddies makes his biweekly-or-so case that it’s not just the best ST, but maybe one of the best TV series ever. I even stuck with Voyager through all of its weirder episodes, including that one where they turn into primordial lizards and the one where Janeway ends up grappling hand-to-hand with an alien virus. TNG was the show my family got together to watch each week, though, in that bygone era when new episodes of your favorite show didn’t show up until 7pm on Thursday night and you had to be there when they did. Everything about it was great. I saved up for my own die-cast NCC-1701-D with a detachable saucer section and bought action figures of the whole cast (pretty sure that the knees still didn’t bend, though). I think the show hit me at just the right age - old enough to understand some of the speculative ideas and philosophical issues raised by different alien encounters, but still little enough that the at-times rocky special effects didn’t seem corny to me. I wrote to most of the cast (getting signed photos back from quite a few), I sent Jonathan Frakes a screenplay for a potential TNG episode (“Good Script.” he signed on the next photo he sent), and generally immersed myself in the world of the late 24th century.

Of all the members of the crew, one stood out in particular for me. Maybe it was because he was the only actor I immediately recognized in the show: If you were a bookworm in the 80’s, Reading Rainbow was essential viewing, after all. Maybe it was because he was an engineer, a profession I was starting to develop an interest in after taking some parent-child electronics classes with my Dad. Whatever it was, I thought Geordi La Forge was awesome. He was smart, he was a hard worker, a good leader, and a great friend - especially to Lt. Commander Data. These were qualities that a lot of the crew had, but Geordi also had something that they didn’t.

He had his VISOR.

The VISOR (or Visual Instrument and Sensory Organ Replacement) is a prosthetic visual aid worn like a pair of glasses that provides a blind user with the ability to sense light. Geordi wears it through the entire run of TNG, and besides giving him the ability to see despite his congenital blindness, it also gives him access to a broader range of visual abilities than anyone else on the ship. I loved this idea - a cybernetic eye that did more than the human eye ever could! What was that even like? How did it work? Could we make something like that someday? The VISOR was an ever-present reminder that we were in a universe where science and technology had made incredible things possible. What was really neat about it to me was something kind of subtle: While it was a fact of Geordi’s life and occasionally gave him necessary information about plot-relevant stuff, mostly his blindness and its treatment were just a part of the Enterprise’s reality. There’s one episode where he’s trapped on a planet without it, making for a harrowing stretch where you’re forced to watch him struggle to survive in its absence, but most of the time it isn’t a big deal. I was too little to have sophisticated ideas about things like representation and diversity, but I did understand that being different was hard. Besides the allure of this intriguing future tech, seeing Geordi be part of the crew week-to-week without people constantly bringing up this very conspicuous difference made an impression on me.

Now, the VISOR intrigues me on a different level. What exactly can Geordi see and do with the aid of this prosthetic device? Given what we see on screen, what does it seem like it must be doing to make visual experience possible? For a show that spawned technical guides about the ships galore, I’ve had a hard time finding much in-universe content about the VISOR. According to Memory Alpha, the earliest known version of the device is seen on board the USS Discovery in the 23rd century (see below), but I haven’t seen any canon material about its invention or development elsewhere. That’s just as well I suppose, because I’m not so interested in unpacking official content about the VISOR. Instead I think it offers a neat excuse to talk about some properties of the visual system and the nature of vision restoration in the 24th century and the 21st.

Let’s begin by saying a little bit about the man himself: What is the nature of the condition that the VISOR is meant to address? What do we know about Geordi La Forge’s blindness?

Geordi’s blindness

I usually like to stick with working out what we know based on what we see (and we’ll get there!) but it’s useful to start with some material we can find in different ST:TNG scripts. First, Geordi’s blindness is congenital rather than the result of an injury or disease. According to the episode “Hero Worship,” he was first given a VISOR at the age of 5, which is in itself kind of interesting. When we can (as in the case of congenital cataracts), we try to correct visual impairment as early as possible. Restoring sight at later ages raises a real question: To what extent can individuals learn to use their visual sense after accumulating experience without one? The visual cortex of blind individuals does not just sit idle, after all, but ends up contributing to other aspects of sensory processing and cognition (Bedny, 2017). This adaptive remapping of cortical specificity could have consequences for attempts to restore sight late in life - will the visual brain become visual again? Oliver Sacks’ patient Virgil (described in “An Anthropologist on Mars”) provides a complex answer to this question. He undergoes treatment to dramatically improve his vision in adulthood, but ultimately struggles to use his visual sense and still “acts blind” in many settings. Sacks infers from Virgil’s description of his experience that this is largely because he finds auditory and tactile sensation far more interpretable than his new-found vision and thus moves more confidently through the world when he simply ignores what he can see. This is not to say that late treatment of congenital blindness is impossible, though! My PhD supervisor, Dr. Pawan Sinha, has for many years now carried out important humanitarian and scientific work in India that demonstrates the potential of deferred treatment for congenital cataract to restore visual function.
Many of the children he works with have had dense, bilateral congenital cataracts for the first 5-10 years of their lives, undergoing treatment through the clinical partnerships he’s established to restore their sight in middle childhood (see image below). What comes next? In many cases, early deficits in how well they understand visual input, including specific differences in how they choose to group and segment different parts of complex images compared to control participants. Sinha describes this as a sort of over-fragmentation of the visual world - local measurements of visual structure can be made, but combining these into larger-scale features doesn’t proceed the same way that it does in the typically developing visual system. This changes over time, however - in his published work, Sinha describes longitudinal results from cataract patients that demonstrate this early, fragmented visual experience does ultimately turn into a visual world that is grouped, segmented, and perceptually organized in a manner more consistent with what controls see (Ostrovsky et al., 2006). This is all to say that the timeline of Geordi’s blindness and the intervention with the VISOR to improve his visual function is consistent with what we know about the treatment of childhood blindness. Even here in the 21st century there is plenty of reason to think that vision could be restored at the age of 5.

Image credit: https://www.sinhalab.mit.edu/

But why is Geordi blind? Maybe that seems like a strange question, but there are multiple ways to be blind and I want to know which of these best captures Geordi’s circumstances. One can be blind because there is some disruption of the optics of the eye, for example. Cataracts are a great example of this - a cloudy lens diffuses incoming light, making it difficult or impossible to resolve patterns of light (though simply detecting the difference between light and darkness is often preserved). What if you simply don’t have a lens at all? Both the cornea and the lens play important roles in focusing incoming light onto the back of the retina, and if you’ve ever had laser surgery to correct your vision, you may have experienced the disconcerting moment when the doctor lifted a flap of your cornea, suddenly giving you a profoundly blurry experience of the world. One can also have retinal damage of many different kinds, too - if the photoreceptors at the back of the eye are damaged, then you have no tools to transduce incoming light into electrical signals that can be sent inwards to the rest of the visual system. Conditions like macular degeneration and retinitis pigmentosa work this way, with selective retinal damage to central vs. peripheral vision respectively. And it doesn’t stop there! You can be cortically blind as well, with intact eyes, an intact retina, but substantial damage to the primary visual cortex (situated in your occipital lobe). In these individuals, incoming light is focused by the eye and transduced by the retina, but lacks a landing site in visual cortex. Without the right destination, it doesn’t matter how much sensory information is being sent on by earlier stages - there’s nothing there to do the cortical work that provides you with a conscious experience of seeing.

The appearance of Geordi’s eyes tells us a little bit about what might be going on insofar as his eyes have a distinct cloudy appearance that suggests something like cataract. This surely can’t be the only thing going on, though. Cataract surgery is straightforward in the present day and I’m confident that Dr. Crusher could easily replace Geordi’s lenses given that she’s practicing medicine 300 years or so from now. That Geordi hasn’t had such a procedure suggests to me that there’s no reason to bother - he must have damage further on in his visual system as well. We get some confirmation for this later in the series during a conversation between Geordi and Dr. Pulaski, who points out that there are frontier surgical procedures that could potentially treat Geordi’s blindness and obviate the need for the VISOR. As she’s describing the treatment (in the TNG episode “Loud as a Whisper”), Dr. Pulaski mentions the potential to regenerate Geordi’s optic nerve, which is the conduit between the retina and the rest of the visual system. No optic nerve, no vision. That Geordi’s optic nerve would need to be regenerated provides us with the information we need: Both his eyes and his optic nerve are atypical, but his visual cortex is most likely intact. This is important to know as we start to consider more about what the VISOR must be doing both as an independent EM sensor and as an interface between the environment and Geordi’s nervous system.

Interfacing the VISOR with the Human Visual System

I think it’s particularly fascinating to consider how the VISOR appears to be transmitting information between the outside environment and the nervous system. Take a look at this image, in which you can see the terminals on Geordi’s temples where the VISOR attaches. These loom large for me in my recollections of TNG - the click that the VISOR made as he positioned it over these nodes seemed extremely cybernetic to me for some reason.

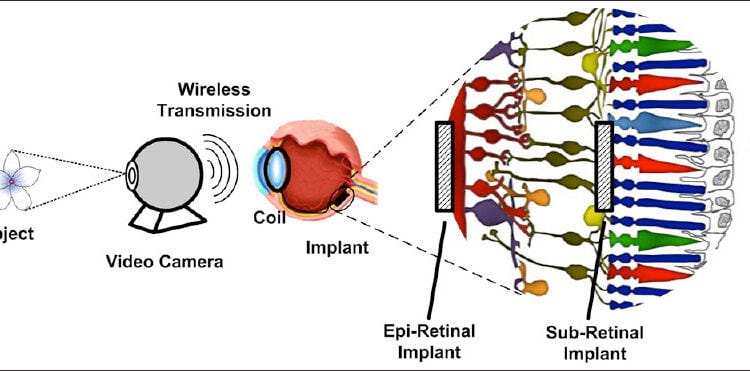

Besides being sort of neat and sci-fi, why do I think these are so interesting, though? Largely because of what they suggest about the way the device must be delivering information about visual patterns to stages of processing beyond Geordi’s damaged optic nerve. Let’s start by thinking about a sort of generic visual prosthetic that’s more like a cybernetic eye than the VISOR seems to be: Specifically, let’s consider what it would be like to just replace a damaged retina with an artificial one using modern technology. One recipe for a visual prosthesis like this is as follows: Use an artificial device to take video of the environment, then relay those measurements to an implant positioned either epi-retinally or sub-retinally (see below). That implant produces the electrical signals that the retina cannot, leading to activity in the cells connected to them downstream. Et Voila! A key difference between this framework and what the VISOR does, though, has to do with Geordi’s damaged optic nerve: The prosthesis I just described steps in at just one crucial step - the transduction of light by the photoreceptors - and produces signals that proceed down the rest of the visual pipeline as usual. The VISOR is replacing quite a few more steps, which leads to some extra work to do and one particularly difficult problem to solve.

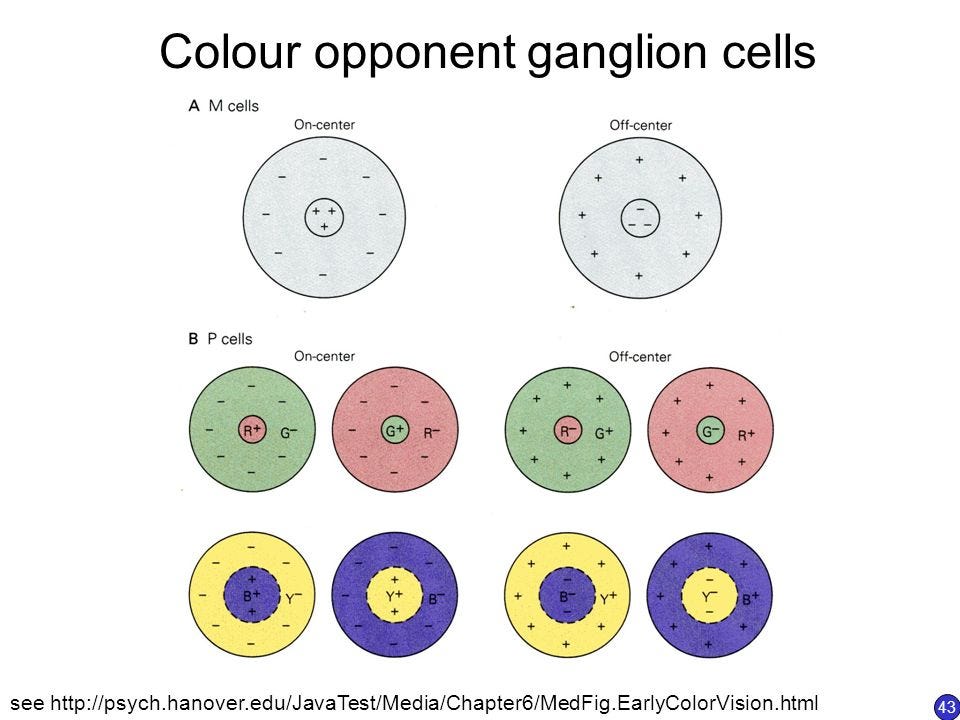

For one, those next steps in visual processing just after the retina are already doing some more interesting stuff than the photoreceptors do themselves. The cells in the retinal ganglion layer are not just sensitive to the intensity and wavelength of light, for example, but also start to provide measurements about patterns of light via receptive fields that incorporate both excitatory and inhibitory connections from preceding cells. To be more specific, retinal ganglion cells tend to be sensitive to local contrast via a center-surround architecture in which light falling in the very center of the receptive field needs to differ from the light falling in a surround region to produce strong signals. Depending on the cell, this makes them sensitive to local increments or decrements of light or sensitive to local changes in chroma. You can see examples of the different kinds of retinal ganglion cell receptive fields in the diagram below - processing that I’m guessing the VISOR may approximate in some fashion given that it isn’t happening in Geordi’s retinal ganglion layer.

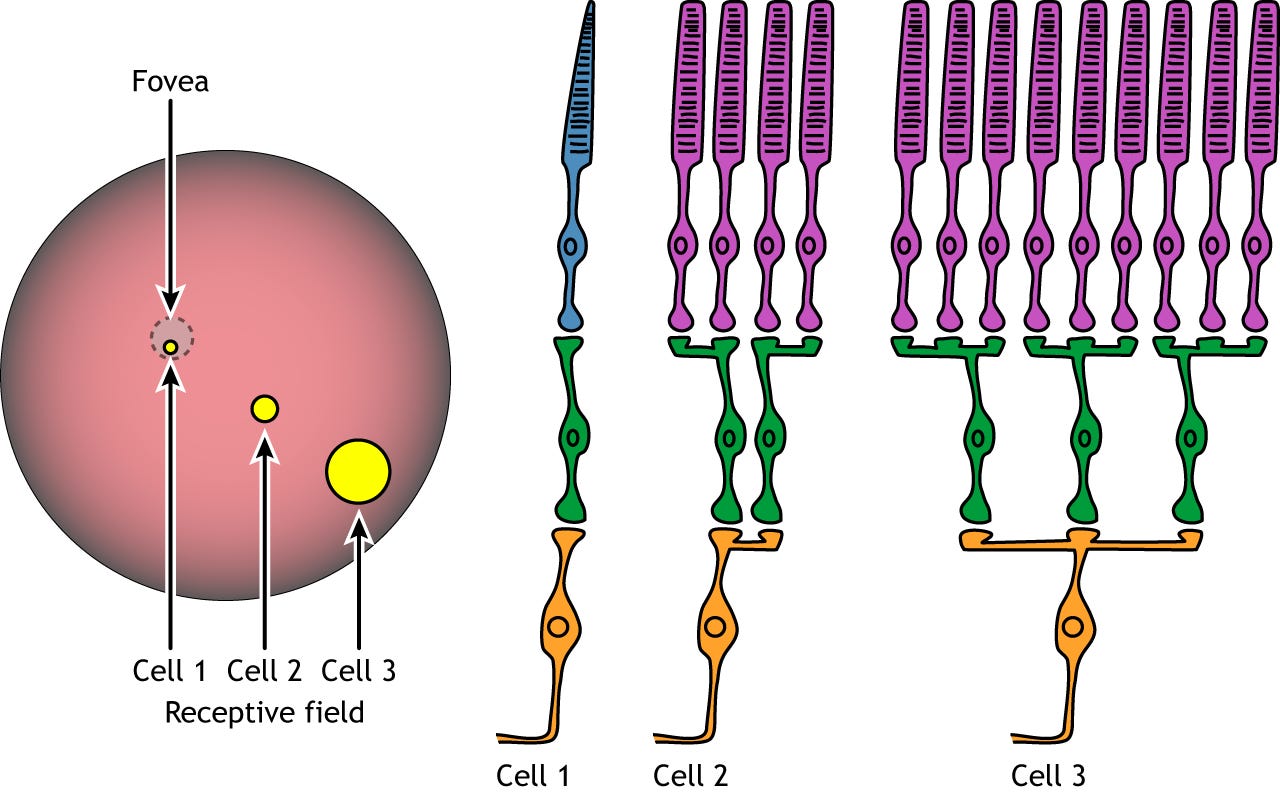
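To make the center-surround idea a bit more concrete, here is a minimal sketch of the kind of filtering a prosthesis might apply to stand in for that missing ganglion-layer processing. It uses a one-dimensional difference-of-Gaussians kernel, which is a common textbook approximation rather than anything we know about the VISOR. The function names, the kernel widths, and the luminance profile are all illustrative assumptions.

```python
import math

def gaussian_kernel(sigma, radius):
    """Return a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def dog_kernel(sigma_center=1.0, sigma_surround=3.0, radius=9):
    """ON-center kernel: narrow excitatory center minus broad inhibitory surround.

    The sigma and radius values are made up for illustration.
    """
    center = gaussian_kernel(sigma_center, radius)
    surround = gaussian_kernel(sigma_surround, radius)
    return [c - s for c, s in zip(center, surround)]

def filter_signal(signal, kernel):
    """Apply the kernel at every position, repeating edge values at the borders."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)  # clamp to a valid index
            acc += w * signal[j]
        out.append(acc)
    return out

# A luminance profile: a dark region, then a step up to a bright region.
luminance = [0.2] * 20 + [0.8] * 20
on_center = filter_signal(luminance, dog_kernel())
off_center = [-r for r in on_center]  # OFF-center cells prefer the opposite polarity

print("uniform region:", on_center[5])
print("dark side of edge:", on_center[19])
print("bright side of edge:", on_center[20])
```

Because the center and surround weights cancel, uniform regions produce essentially no response, while the step edge produces a negative response on its dark side and a positive one on its bright side. That is the sense in which these cells report local contrast rather than raw intensity.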

This isn’t the only feature of the retinal ganglion layer for us to think about, however. These more complex receptive fields I just got done describing depend on converging connections between cells in the retina itself and the target cells in the ganglion layer. The amount of convergence between that first stage and the next does a lot to shape your subjective experience across the visual field. In the central part of the retina, convergence is low - a retinal ganglion cell may receive signals from just 1-3 cones that contribute to the central part of its receptive field and a similar number that contribute to the surrounding annulus. That low convergence means that the activity of such a ganglion cell depends on differences between the activity of a few photoreceptors and their neighbors just next door, which means differences in contrast that occur in a small part of the image can make this cell produce activity. When you look at cells in the peripheral parts of the retina, however, convergence is higher - the central region of a ganglion cell’s receptive field may receive contributions from many photoreceptors, and the surrounding annulus will too. That increase in convergence has a benefit - lots of sensors contributing to a single cell’s activity helps increase sensitivity to low contrast. However, it also means that you’ve lost your ability to measure contrast in small regions. Something in that neighborhood covered by that large collection of photoreceptors led to activity in this ganglion cell, but you can’t be more precise about exactly where.

Image Credit: https://openbooks.lib.msu.edu/introneuroscience1/chapter/vision-the-retina/
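The convergence trade-off described above can be sketched with simple pooling. This is a toy model under loud assumptions: real ganglion cells are not plain averages of their inputs, and the pool sizes (1 and 16), the stripe pattern, and the noise level are invented for illustration.

```python
import random
import statistics

def pool(receptors, convergence):
    """Average non-overlapping groups of `convergence` receptor outputs.

    Each group stands in for the inputs to one ganglion cell (a simplification).
    """
    return [
        sum(receptors[i:i + convergence]) / convergence
        for i in range(0, len(receptors) - convergence + 1, convergence)
    ]

def contrast(values):
    """Crude contrast measure: spread between the largest and smallest response."""
    return max(values) - min(values)

# Fine detail: stripes exactly one receptor wide.
fine_stripes = [1.0 if i % 2 == 0 else 0.0 for i in range(64)]
foveal_view = pool(fine_stripes, 1)       # low convergence keeps the stripes
peripheral_view = pool(fine_stripes, 16)  # high convergence averages them away

# Sensitivity: a faint, uniform signal buried in receptor noise.
rng = random.Random(0)  # fixed seed so the run is repeatable
noisy = [rng.gauss(0.05, 0.2) for _ in range(1600)]
single_spread = statistics.pstdev(pool(noisy, 1))
pooled_spread = statistics.pstdev(pool(noisy, 16))

print("stripe contrast, low vs. high convergence:",
      contrast(foveal_view), contrast(peripheral_view))
print("noise spread, low vs. high convergence:",
      single_spread, pooled_spread)
```

With low convergence the stripes survive intact, and with high convergence they vanish into a uniform gray. The flip side shows up in the noise: pooling many receptors gives a much steadier estimate of a faint signal, which is the sensitivity benefit, at the cost of knowing where in the pool the signal came from.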

This is the extra work I was talking about - honestly, though, I don’t think it’s bad at all. These operations, both the center-surround filtering offered by the usual retinal ganglion cell layer and the varying convergence across the visual field are things we can already approximate with image processing operations implemented in languages like MATLAB, Python, or whatever else you like to use (I prefer MATLAB - fight me). I did say there was a problem to work through though and now I think we have to address it head-on. Imagine we’ve got the VISOR up and running and it is happily turning incoming light into electrical signals, and then applying pre-processing steps to approximate what the retinal ganglion layer would be doing if it were intact. Our next step is to send that information along to Geordi’s intact visual pathways, bypassing the optic nerve that we’re assuming isn’t there and heading straight for…where exactly?

Our destination is probably the lateral geniculate nucleus, or LGN. This is a bilateral projection of the thalamus that was named “geniculate” because someone (who I assume was struggling to come up with anything better) decided that it looked like a bent knee. This is true if you look at it in cross-section and haven’t seen a knee for a while. This structure is where the retinal ganglion cells send information via the optic nerve, so I figure it must be where the VISOR is also trying to deliver its measurements. The thing about this crucial step is that we can’t just sort of generically beam “vision” into these little chunks of neural tissue. The LGN has a ton of interesting and important structure to it that we need to respect so that subsequent stages in visual processing have the information they need. To put it more plainly, the VISOR has to make sure that it puts the information that it has in the right places.

Retinotopic organization in the LGN

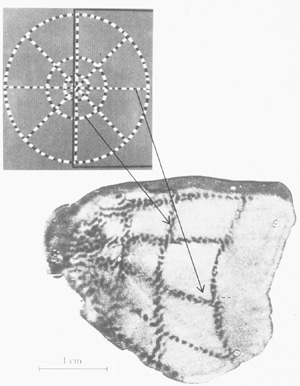

One basic property of the LGN is something called retinotopic mapping. This term refers to the organization of information from different locations of the visual field such that neighboring parts of the image end up being processed by neighboring cells in the visual system. If that sounded a little complicated to you, I think a picture will easily be worth a thousand words here. Take a look at the figure below, which shows you two things: (1) A picture of a sort of checkerboard wheel with alternating light and dark checks arranged into circles and spokes, and (2) a flattened portion of primary visual cortex taken from a non-human primate who had been looking at the wheel. Before showing the wheel to this animal, a radioactive tracer was introduced that would be taken into cells that were producing activity. The idea is that after you present the wheel to the animal’s visual system, you can look at the primary visual cortex afterwards to see how cells in that part of the brain are distributed in comparison to how the wheel took up space across the visual field. What I hope is immediately evident to you is that there is a projection of the image across the surface of the cortex. The wheel isn’t scrambled up - neighboring cells are responding to neighboring parts of the picture.

What this means for Geordi and his VISOR is that the information we have from the prosthesis about distributions of light across the visual field needs to be transmitted into the LGN so that this retinotopic map is respected. Measurements from different x,y coordinates in the image have to land at different spots in the LGN so that the image is organized in the manner the visual system expects. This is much easier to do (it seems to me) if you start with an implant that is laid out across the retinal surface like the one I described up above: The retinal ganglion cells you talk to and the connections you use via the optic nerve all just have this retinotopic organization baked in. The VISOR needs to basically send different measurements to different depths in the LGN, which probably meant some kind of extensive mapping of Geordi’s specific LGN structure before the device was going to be fitted. But this is just one feature of the LGN that we need to be careful about as we transmit VISOR measurements inwards - there’s more going on than just retinotopy!
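Here is one way to picture the bookkeeping involved in routing x,y measurements to the right spot on a retinotopic map. The sketch borrows the complex-logarithm form that is often used as a simplified model of the map in primary visual cortex. Treating it as a stand-in for the LGN is purely my assumption, and the constants k and a are placeholder values rather than measurements from any brain.

```python
import cmath
import math

def field_to_map(x_deg, y_deg, k=15.0, a=0.7):
    """Map a visual-field position (degrees, one hemifield) to 2D map coordinates.

    Uses w = k * log(z + a) with z = x + iy. The values of k and a are
    placeholders chosen for illustration, not fitted parameters.
    """
    if x_deg < 0:
        raise ValueError("this sketch only covers one hemifield (x_deg >= 0)")
    w = k * cmath.log(complex(x_deg, y_deg) + a)
    return (w.real, w.imag)

def map_distance(p, q):
    """Distance on the map between two visual-field positions."""
    return math.dist(field_to_map(*p), field_to_map(*q))

# Neighboring points in the visual field land at neighboring map positions.
near_fixation = map_distance((1.0, 0.0), (2.0, 0.0))
far_periphery = map_distance((20.0, 0.0), (21.0, 0.0))

print("map distance for 1 degree near fixation:", near_fixation)
print("map distance for 1 degree in the periphery:", far_periphery)
```

The mapping is smooth, so neighbors stay neighbors, which is the property that matters here. In this particular model a degree of visual angle near fixation also takes up more of the map than a degree in the periphery. A device like the VISOR would presumably need a per-person lookup of this kind rather than a one-size-fits-all formula.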

Ocular organization of LGN layers

Besides being (arguably) shaped like a bent knee, there is another aspect of the LGN’s structure that is evident as soon as you look at it: The thing has layers. Take a look at the cross-section below to see what I mean.

Those purple bands that you see stacked up on one another in the image to the right are the layers that I’m talking about. When we talk about preserving retinotopy by sending signals from the VISOR to the LGN, what I mean is that the measurements from different parts of the visual field need to go to the right place in each of those crescents so that we maintain that smooth projection of the visual field onto this stage of processing. That’s what’s happening as you move around within a layer: cells are getting information from different parts of the image and that’s changing in a systematic, retinotopic way. But what’s happening as you move across layers?

One important thing that’s happening has to do with how the retinae make connections to the LGN. Yup, that’s not a typo: retinae. See, so far I haven’t brought this up, but you don’t just have one retina - you have two of those bad boys. This is a very useful property for your visual system to have because (among other things) it makes it possible for you to use retinal disparity (the difference in where the same feature in an image appears on the left and right retina) to make inferences about depth. The thing is, it doesn’t do you any good unless you have some means of sort of marking or storing which eye each measurement came from. This is one of the things that this layered structure in the LGN allows us to do by separating information from the left and right eyes anatomically across the different layers. Each layer of the LGN receives information from either the left eye or the right eye, giving you independent retinotopic maps of your left-eye view of the world and your right-eye view of the world. The VISOR probably needs to be careful about transmitting information to respect this organization, too, or Geordi may have difficulty using information about disparity and other differences between left-eye and right-eye vision to make inferences about the visual environment.
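To see why keeping track of eye-of-origin matters, here is a small sketch using the textbook stereo triangulation formula for two parallel pinhole cameras, depth = focal length x baseline / disparity. This is a computer-vision idealization and not a model of what the brain computes. The focal length in pixels and the 6.5 cm baseline are assumed values I picked for illustration.

```python
def depth_from_disparity(x_left, x_right, focal_px=800.0, baseline_m=0.065):
    """Estimate depth (meters) from where one feature lands in each eye's image.

    x_left and x_right are horizontal image positions in pixels.
    focal_px and baseline_m are illustrative assumptions.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        # For a point in front of two parallel cameras the feature sits
        # further right in the left image, so this signals bad input.
        raise ValueError("non-positive disparity: are the eye labels swapped?")
    return focal_px * baseline_m / disparity

far_object = depth_from_disparity(x_left=420.0, x_right=400.0)
near_object = depth_from_disparity(x_left=440.0, x_right=400.0)
print("far object depth (m):", far_object)
print("near object depth (m):", near_object)

# Same two measurements, but with the left/right labels mixed up.
try:
    depth_from_disparity(x_left=400.0, x_right=420.0)
except ValueError as err:
    print("swapped labels:", err)
```

Larger disparities mean nearer objects, and the sign of the disparity only makes sense if you know which measurement came from which eye. Lose the labels and the same pair of numbers stops meaning anything, which is roughly the problem the eye-specific LGN layers solve.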

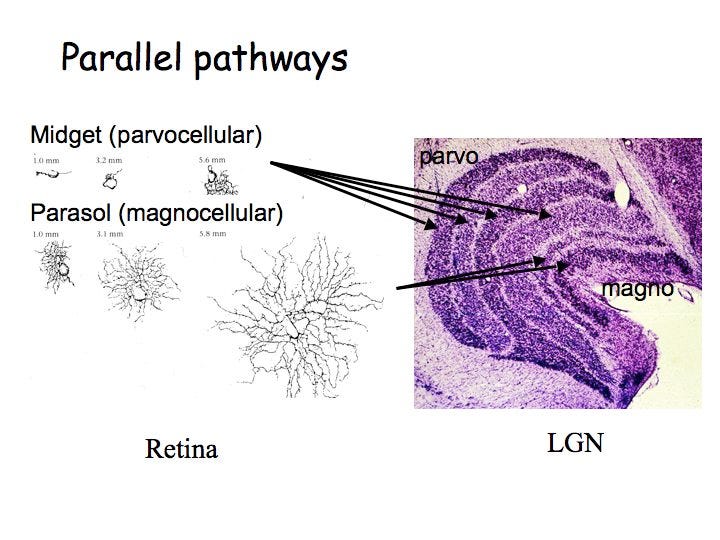

There is another difference we can see across the LGN layers, but to catch on to this organizational principle we have to get an even closer view of the thing - close enough to actually see the cells that make up these different stripes of visual processing. If we were to do so, we would see that cells in the bottom two layers of the LGN are systematically larger than cells at the same position in the top four layers. Those layers with the larger cells are referred to as the magnocellular layers (magno- meaning “big” in Latin) and the layers with the smaller cells are referred to as the parvocellular layers (parvo- apparently meaning “small” in Latin, but who knows that?). To get us away from Latin prefixes (and cross up our P’s and M’s for no reason), we also sometimes just refer to the large cells as parasol cells to reflect the way they spread out over a wider area compared to the smaller midget cells. This difference in size reflects a difference in convergence similar to the one we talked about in the retinal ganglion layer: Parasol cells receive information from many cells across a wider swath of the visual field, which tends to make them more sensitive to small amounts of light, but less able to encode small-scale variations in the image. Midget cells receive information from fewer cells that cover less of the image, making them better able to measure variation at fine scales. Again, all of this is stuff the VISOR probably needs to respect by wirelessly beaming data into the nervous system as a sort of carefully structured 3D object.

There is still more we could say about the LGN that I’m glossing over, but I’m going to stop here. Maybe this seemed like excessive detail about a sort of basic step in how the VISOR works (we get the information in) but I think it’s important both to show off the cool architecture of early stages of visual processing in the brain and to highlight the hard technical problems you have to solve when you’re trying to combine artificial and biological systems. I’d be remiss if I didn’t mention that there are current approaches to prosthetic vision that try to take advantage of what we know about retinotopy, etc. to deliver a meaningful visual experience, however! Instead of that diagram I referred to above for a candidate visual prosthesis, what if we put an implant in primary visual cortex itself (or the LGN) instead of the retina? That way we could make sure we matched up information from our sensors with the retinotopic map in the area where the implant goes. It’s not like this makes everything easy, but it means understanding this aspect of the visual system’s architecture is critically important no matter what we’d want to do.

What does the VISOR measure?

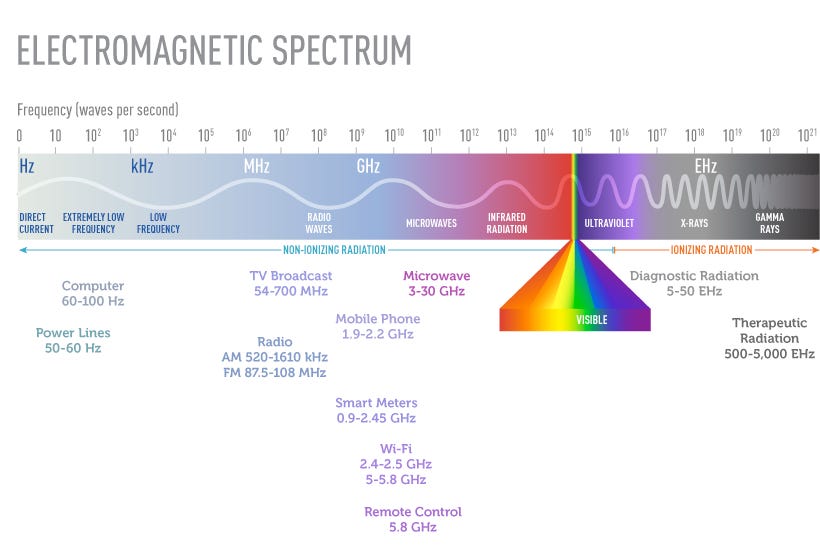

Now onto the stuff I love the most about topics like this - what does Geordi see when he uses the VISOR? What does this tell us about what it does and how his visual system has adapted to use this artificial sensor? Let’s go back to Memory Alpha for some of the few technical deets we have available regarding the VISOR: Apparently it is capable of measuring light across a much wider range of wavelengths than the human retina does. Specifically, the VISOR can measure EM radiation with frequencies between 1 Hz and 100,000 THz. That last unit is terahertz by the way, where the ‘tera’ prefix means 10^12. That’s…kind of a LOT (but hold that thought). For context the typical human retina measures light with wavelengths between about 400 nm and 700 nm, which works out to frequencies between 4 and 8 x 10^14 Hz or so. In the diagram below, I’ve got the full EM spectrum with the little sliver of visible light that we can sense with the retina and you can sort of get a sense of the much wider swath of light that Geordi has access to with the VISOR.

Image credit: https://www.cancer.org/cancer/risk-prevention/radiation-exposure/x-rays-gamma-rays/what-are-xrays-and-gamma-rays.html

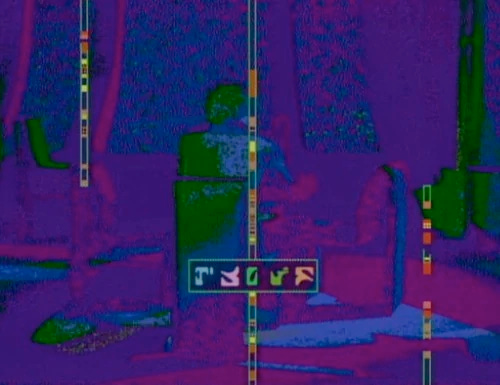
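If you want to check the arithmetic behind those numbers, the only relationship you need is that wavelength times frequency equals the speed of light. Here is a short sketch that converts the limits quoted above and compares the two ranges in octaves (doublings of frequency). The helper names are my own, and the 400 nm and 700 nm limits are the approximate values used in the text.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition of the meter

def to_frequency_hz(wavelength_m):
    """Convert a vacuum wavelength in meters to a frequency in hertz."""
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m

def to_wavelength_m(frequency_hz):
    """Convert a frequency in hertz to a vacuum wavelength in meters."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz

# Approximate limits of human vision, as quoted in the text.
visible_high_hz = to_frequency_hz(400e-9)
visible_low_hz = to_frequency_hz(700e-9)

# The VISOR's quoted limits: 1 Hz and 100,000 THz (1e17 Hz).
visor_low_hz, visor_high_hz = 1.0, 100_000 * 1e12
longest_wavelength_m = to_wavelength_m(visor_low_hz)
shortest_wavelength_m = to_wavelength_m(visor_high_hz)

visible_octaves = math.log2(visible_high_hz / visible_low_hz)
visor_octaves = math.log2(visor_high_hz / visor_low_hz)

print(f"visible light: {visible_low_hz:.3g} Hz to {visible_high_hz:.3g} Hz")
print(f"VISOR wavelengths: {shortest_wavelength_m:.3g} m to {longest_wavelength_m:.3g} m")
print(f"octaves covered: human {visible_octaves:.2f}, VISOR {visor_octaves:.2f}")
```

The human range comes out to a bit less than one octave, while the VISOR’s quoted range covers more than fifty, with wavelengths running from a few nanometers up to hundreds of thousands of kilometers.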

What does this mean in terms of what Geordi can see? The first thing to say about his visual experience is that it’s quite variable across episodes and across different environments. For example, in the images below you can see what Lt. Commander Data looks like to Geordi in the episode “Heart of Glory” and a different shot showing us his view of the bridge of a non-Federation vessel. The image of Data isn’t so different from our experience: He has a bit of a halo and maybe some deeper shadows than we might expect, but mostly this seems like the VISOR is capturing something akin to our subjective experience. The second image is quite different, though: It looks like there are possibly some contrast-reversals in the image (places where light and dark are flipped from what we would expect) and also some enhancement of object edges. The color palette is also not typical of natural scenes, even if we’re thinking about the usual distribution of chroma onboard a starship. Finally, there are some hints of transparency where we wouldn’t usually expect it - the beams at right and the items just in front of the open hatchway on the left both seem a little bit see-through. What’s going on in these different views?

I think this means a number of interesting things. First, the more or less typical view of Data means that in some circumstances Geordi is working with roughly the same sliver of visible light that most of us use. However, the second image clearly suggests he can do much more than that to take advantage of the VISOR’s wider range of sensors. The difference between these images suggests to me that the VISOR permits the user to bandpass incoming light and choose the range of wavelengths that are measured. By itself, that is a neat trick because it requires a great deal of flexibility on the observer’s part: Picard mentions at one point that he can’t make much out in Geordi’s display, highlighting the fact that Geordi has had to engage in a lot of long-term perceptual learning. Geordi acknowledges this point and an unspoken issue related to all of this is that while the crew on the bridge gets direct access to the VISOR in this episode, they don’t have access to the perceptual work that Geordi’s visual system must be doing in subsequent stages to process this input and yield percepts. But what does this mean for the scope of Geordi’s subjective experience? It’s an interesting question, for example, whether or not Geordi has color experiences different than or beyond those of the rest of the crew, or different subjective experiences of properties like motion, materials or transparency. My initial guess would be that most of his color experiences (for example) are going to be like ours but with a shifted and/or rearranged “color map,” but I also think it’s not entirely out of the realm to speculate that his exposure to a much broader range of wavelengths may also have fundamentally changed some of the inferential processes that his visual system carries out.
Anyone looking at the output of his VISOR alone doesn’t have access to any of those computations, though, so while you can try to spy on people by hacking the device the way the Duras sisters did in Star Trek: Generations, you may find that it’s much more difficult to understand what you’re seeing than you thought. At the very least, it seems like Geordi’s visual system shouldn’t necessarily apply the same computations to light in our visual range as it does to light in some of those other bands - the rules about what different kinds of junctions and such actually imply about the occluding surfaces and objects around you may be a little different when you’re playing around with wavelength ranges as big as these.
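One way to picture the “bandpass, then remap colors” idea is the false-color trick used in remote sensing: pick a band, then stretch the familiar red-to-blue range across it. The sketch below is pure speculation about the VISOR and only illustrates the concept. The band limits, the log-scale stretch, and the choice of hue range are all assumptions of mine.

```python
import colorsys
import math

def false_color(freq_hz, band_lo_hz, band_hi_hz):
    """Return an (r, g, b) triple for freq_hz, or None if it is outside the band."""
    if not band_lo_hz <= freq_hz <= band_hi_hz:
        return None  # filtered out by the bandpass
    # Position within the band on a log scale: 0.0 at the low edge, 1.0 at the high edge.
    position = math.log(freq_hz / band_lo_hz) / math.log(band_hi_hz / band_lo_hz)
    # Hue runs from red (0) to blue (2/3), so the lowest frequencies look "red".
    return colorsys.hsv_to_rgb(position * 2.0 / 3.0, 1.0, 1.0)

# Two hypothetical band selections (limits are rough, illustrative values).
VISIBLE = (4e14, 8e14)
ELF = (1.0, 300.0)

red_light = false_color(4e14, *VISIBLE)         # low edge of the visible band
elf_low_edge = false_color(1.0, *ELF)           # low edge of the ELF band
mains_in_elf = false_color(60.0, *ELF)          # mains hum gets a color here
mains_in_visible = false_color(60.0, *VISIBLE)  # but is invisible in this band

print("low edge of visible band:", red_light)
print("low edge of ELF band:", elf_low_edge)
print("60 Hz with ELF band selected:", mains_in_elf)
print("60 Hz with visible band selected:", mains_in_visible)
```

Notice that the same “red” gets assigned to wildly different physical signals depending on which band is selected. Anyone watching the raw display would see colors they recognize without knowing what those colors currently stand for, which fits with Picard’s trouble making sense of Geordi’s view.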

This raises an important question about the VISOR’s measurement range and Geordi’s visual experience that has to do with visual ecology. How much stuff is there to even see in the 100,000 THz range? What about the 1 Hz range? Having the sensors is grand, but it only helps if there is something out there for them to measure. Given what’s in the environment, what should we expect the world to look like at 10 Hz or 100,000 THz?

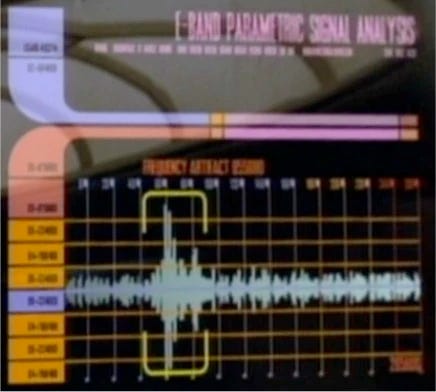

If you take a look at the EM spectrum I included above, we can start thinking through this a little bit. First of all, while I was initially very impressed with that 100,000 THz number I mentioned before (Tera-anything sounds awesome, right?) you’ll note that that 10^17 exponent only takes us a little ways past ultraviolet light and into the X-ray range. That’s still cool, but not quite as exotic (to me at least) as gamma rays or the stuff further down the line. What that upper bound means in terms of visual ecology, though, is that Geordi can use the VISOR to see natural sources of X-rays like radon gas and other radioactive substances. What I’m actually more intrigued by is the lower bound on the VISOR’s measurements - that 1 Hz number might be even cooler in some ways than the high-energy stuff happening in the ultraviolet band and above. I say this because that low range of the EM spectrum includes what we usually call ELF (extremely low frequency) radiation, which is generally produced by electrical activity. All your home appliances, for example, are producing EM energy in the tens of Hz range. Other sources of electricity (including lightning) also produce these signals, but my guess is that Geordi gets a ton of mileage out of examining man-made ELF sources with his VISOR in engineering. Not only can he see these wavelengths in the first place, I think we can safely assume that he can see them in different colors, making a visual inspection of a complex electronic device possibly more akin to someone else getting out a meter to take pointwise measurements of what’s happening across a circuit. Besides those ELF signals, Geordi can also apparently see radio waves, microwaves, and infrared radiation. In terms of what’s actually going to be around in a typical environment, mostly this probably buys him some richer information about bodies that are emitting heat and other electrical phenomena. 
This is also the range that gets him into trouble in the episode “The Mind’s Eye” in which the Romulans use E-band transmission (the actual name for the 60-90 GHz frequency range) to influence Geordi’s behavior.
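
For anyone who wants to place these numbers on the spectrum themselves, here is a small Python sketch that converts a frequency to a wavelength and a rough band label. The band edges are approximate conventions (different references draw the lines in slightly different places), and the names `BAND_EDGES`, `wavelength_m`, and `band_name` are made up for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

# (approximate upper frequency edge in Hz, label); conventions vary by source.
BAND_EDGES = [
    (3.0e8, "radio"),        # ELF sits at the very bottom of this range
    (3.0e11, "microwave"),
    (4.0e14, "infrared"),
    (7.9e14, "visible"),
    (3.0e16, "ultraviolet"),
    (3.0e19, "X-ray"),
]


def wavelength_m(freq_hz):
    """Free-space wavelength in meters for a frequency in Hz."""
    return SPEED_OF_LIGHT_M_PER_S / freq_hz


def band_name(freq_hz):
    """Return the first band whose upper edge lies above freq_hz."""
    for upper_edge_hz, label in BAND_EDGES:
        if freq_hz < upper_edge_hz:
            return label
    return "gamma ray"


examples = [
    ("VISOR lower bound", 1.0),
    ("E-band midpoint", 75e9),
    ("VISOR upper bound", 1e17),  # 100,000 THz
]
for label, freq_hz in examples:
    print(f"{label}: {freq_hz:.3g} Hz, "
          f"{wavelength_m(freq_hz):.3g} m, {band_name(freq_hz)}")
```

The two VISOR bounds end up separated by seventeen orders of magnitude in wavelength, from roughly the distance light travels in a second down to a few nanometers.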

Visualizing E-band pulses on the Enterprise bridge from “The Mind’s Eye.”

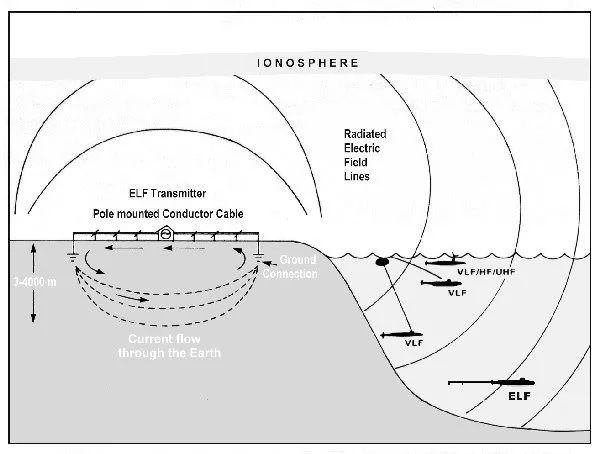

The thing about this low bound to the VISOR’s capabilities that’s weird to me, however, is that we have to really buy into some amazing 24th century tech to pull this feat off. Here in the 21st century, we’re stuck measuring ELF signals with very large antennas. See, in general antennas have to be built at the approximate scale of the wavelengths we’re trying to detect: If you want to detect waves that have wavelengths in the meters, then you’ll need an antenna that’s at least centimeter or decimeter scale. The wavelengths associated with ELF signals are so large (thousands of miles) that the US Navy once figured that they could maybe make Wisconsin into a large enough antenna to send extremely low-frequency signals to submarines. No joke - check out the figure below.

I love the fact that Geordi can see all these neat things that are happening in the range that corresponds to the devices we build to manipulate electricity - totally perfect for an engineer! What I do want to know though, is how the man does it with a sensor that’s only the size of his face instead of the size of our 30th state. It’s not the most glamorous question, sure, but it might actually be the most technically impressive feat that the VISOR is pulling off.
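
The size mismatch is easy to put numbers on. The sketch below uses the textbook half-wave rule of thumb (antenna length of about half a wavelength) as a stand-in for "built at the approximate scale of the wavelength"; real antenna design is more forgiving and more complicated than this. The 76 Hz example frequency and the 15 cm `VISOR_WIDTH_M` are assumptions chosen for illustration, not canon.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
VISOR_WIDTH_M = 0.15  # hypothetical: roughly the width of a face


def half_wave_length_m(freq_hz):
    """Length of an idealized half-wave antenna for a given frequency."""
    return SPEED_OF_LIGHT_M_PER_S / freq_hz / 2.0


def resonant_frequency_hz(antenna_length_m):
    """Frequency at which an antenna of this length is half a wavelength long."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * antenna_length_m)


elf_hz = 76.0  # example frequency in the ELF neighborhood
needed_m = half_wave_length_m(elf_hz)
print(f"Half-wave antenna at {elf_hz} Hz: about {needed_m / 1000:.0f} km")
print(f"That is {needed_m / VISOR_WIDTH_M:.2e} times the VISOR's width")
print(f"A {VISOR_WIDTH_M} m antenna is half-wave at about "
      f"{resonant_frequency_hz(VISOR_WIDTH_M) / 1e9:.2f} GHz")
```

By this crude measure a face-sized sensor is naturally matched to frequencies around a gigahertz, which is several orders of magnitude away from anything in the ELF range.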

Why does using the VISOR hurt?

Now to consider a different aspect of Geordi’s experience using the VISOR that doesn’t have as much to do with how he sees, but a lot to do with how he feels. Using the VISOR hurts. We see evidence of this many times throughout the series and Geordi discusses the possible use of painkillers and a surgical intervention to permanently alleviate these sensations with Dr. Crusher, only to refuse both for fear that it would compromise the VISOR’s effectiveness. Memory Alpha tells us that the VISOR incorporates a few preprocessing steps to minimize the amount of sensory information being sent along to the visual system in an attempt to prevent “overload,” which is presumably partly to blame for Geordi’s pain. Those steps are kind of interesting to me because they’re real signal processing strategies: delta-compression probably refers to describing the VISOR’s signals with difference encoding rather than the raw values and pulse compression is a technique used in radar signalling. Still, the way the information is compressed is ancillary to the big question I have: Why should there be pain associated with the information being sent in via the VISOR?
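
As a quick aside on the delta-compression step mentioned above, here is a minimal Python sketch of difference encoding. It only illustrates the general technique of sending changes instead of raw values; the function names are hypothetical and nothing here is a claim about how the VISOR actually packages its signals.

```python
def delta_encode(samples):
    """Keep the first sample, then store each change from the previous one."""
    if not samples:
        return []
    deltas = [samples[0]]
    for previous, current in zip(samples, samples[1:]):
        deltas.append(current - previous)
    return deltas


def delta_decode(deltas):
    """Rebuild the original samples with a running sum of the deltas."""
    samples = []
    running_total = 0
    for delta in deltas:
        running_total += delta
        samples.append(running_total)
    return samples


readings = [100, 101, 103, 103, 102]
encoded = delta_encode(readings)
print(encoded)                # small numbers after the first entry
print(delta_decode(encoded))  # recovers the original readings
```

When a signal changes slowly, the differences are much smaller than the raw values, so they can be described with fewer bits. That is the sense in which this kind of encoding cuts down the amount of information being sent along.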

I’ll start by thinking through what “overload” might mean in terms of neural stimulation. To start with the basics that we’ve established, the VISOR probably works by sending signals into the LGN, signals that I assume stimulate cells there in accordance with the visual features measured by the sensors in the VISOR and pre-processed by its approximation of the retinal ganglion layer. I’m bringing this up because I think it means there are only a few things the VISOR can end up doing that lead to “more information” than the visual system usually deals with.

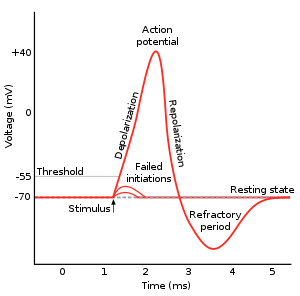

My first take on what “more information” could mean is that the VISOR may end up stimulating the same cells to fire more frequently than would usually occur. There are some limits to this, however, that I suspect constrain just how different this could be in Geordi’s LGN compared to ours. For one, after a neuron produces an action potential (a “spike” if you prefer), there is a refractory period that follows - an interval of time during which the cell either cannot produce another spike or needs a much stronger push to do so. This interval lasts for about a millisecond and is partly the result of the hyperpolarization of the cell membrane following a spike (see the little dip in voltage on the right-hand side of this plot of an action potential) - basically, the mechanisms that end up terminating the action potential lead to a sort of inertia in the cell’s potential. This means that while you could make this cell produce another spike, you’d need to work even harder to do it than you did the last time. While I suppose this could mean the VISOR keeps supplying that extra nudge to keep cells firing faster than usual, this also seems like something they could probably adjust about the transmission of information inward to stimulate the LGN. Besides, even if you do force cells to produce action potentials again and again, those cells will tend to habituate or decrease their rate of response. The VISOR can keep its foot on the accelerator, but eventually the cells in the LGN will stop responding as robustly. My suggestion then is that this is unlikely to be the key factor in explaining why Geordi experiences the pain that he does.
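
The ceiling that a refractory period puts on firing rate can be sketched in a few lines of Python. This is a deliberately crude toy: it treats the refractory period as absolute (no spike at all inside the window, rather than "harder to spike"), ignores habituation, and uses the roughly one millisecond figure from above. The function name `count_spikes` is hypothetical.

```python
def count_spikes(pulse_times_ms, refractory_ms=1.0):
    """Count stimulation pulses that produce a spike in a toy neuron.

    A pulse only triggers a spike if at least `refractory_ms` has passed
    since the last spike; pulses arriving sooner are simply wasted.
    """
    spikes = 0
    last_spike_ms = None
    for t in pulse_times_ms:
        if last_spike_ms is None or t - last_spike_ms >= refractory_ms:
            spikes += 1
            last_spike_ms = t
    return spikes


one_second_ms = 1000
# Gentle drive: one pulse every 2 ms (500 pulses in a second).
slow = [i * 2.0 for i in range(one_second_ms // 2)]
# Aggressive drive: one pulse every 0.25 ms (4000 pulses in a second).
fast = [i * 0.25 for i in range(one_second_ms * 4)]

print(count_spikes(slow))  # every pulse gets through
print(count_spikes(fast))  # capped by the refractory period
```

Driving the toy cell eight times harder only doubles its output, because the refractory window caps it at about a thousand spikes per second no matter how many pulses arrive.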

But then what could it be? One idea I had has to do with something that may be different about Geordi’s neural responses to images due to what the VISOR measures about natural scenes: I wonder if sparsity differs depending on the wavelengths he’s choosing to work with.

Let’s say that you’re trying to come up with a way to measure what you see in natural scenes and you’re going to use neurons to do it. You have some decisions to make, especially with regard to what kinds of patterns of light are going to elicit activity from your cells: Remember that those retinal ganglion cells (and it turns out, their close cousins in the LGN) would respond when patterns with the right center-surround organization landed in their receptive field. What are good patterns to measure for your hypothetical visual system? More importantly, what do we mean by good?
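
If the center-surround idea is fuzzy, a one-dimensional difference-of-Gaussians filter is a common textbook stand-in for that kind of receptive field. The Python sketch below is an illustration under assumed parameters (the widths `sigma_center` and `sigma_surround` are arbitrary choices), not a model of any particular cell.

```python
import math


def gaussian(x, sigma):
    """Value of a unit-area Gaussian with width sigma at position x."""
    return math.exp(-(x * x) / (2 * sigma * sigma)) / (sigma * math.sqrt(2 * math.pi))


def dog_kernel(radius, sigma_center=1.0, sigma_surround=3.0):
    """Narrow excitatory center minus broad inhibitory surround."""
    return [
        gaussian(x, sigma_center) - gaussian(x, sigma_surround)
        for x in range(-radius, radius + 1)
    ]


def response(light_pattern, kernel):
    """Weighted sum of the light falling on the receptive field."""
    return sum(light * weight for light, weight in zip(light_pattern, kernel))


radius = 12
kernel = dog_kernel(radius)
size = 2 * radius + 1

uniform_light = [1.0] * size                       # light everywhere
small_spot = [1.0 if i == radius else 0.0 for i in range(size)]  # light on the center only

print(response(uniform_light, kernel))  # close to zero
print(response(small_spot, kernel))     # clearly positive
```

The cell barely responds to uniform illumination because the center and surround cancel, but it responds well to a small spot that lands on the center alone. That is the sense in which a receptive field "prefers" some patterns of light over others.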

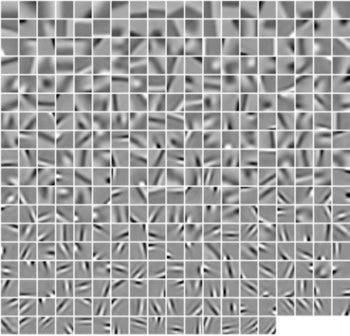

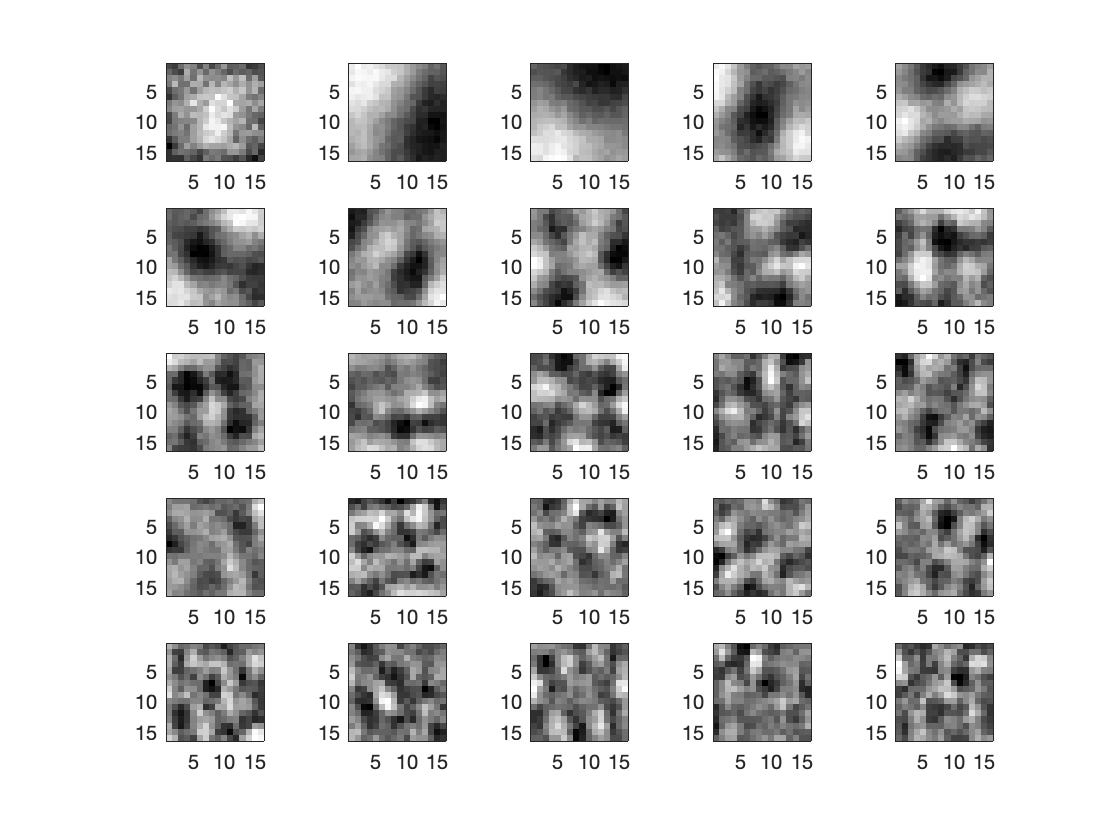

One way to be specific about that second question is to say that we’d like to choose what our cells measure based on one key constraint - we’d like to ensure that on average, for the kinds of images we expect to see, only a fraction of our cells will be active. That kind of constraint is called a sparsity constraint because it is meant to arrange a situation where a small (or sparse) collection of cells is sending signals about the environment at any given moment. There are a bunch of reasons why this is a good idea, especially if we aim for a fraction that’s small, but not too small. Some of these reasons have to do with our ability to avoid errors in measuring what we see in the event that we lose some cells (a problem with a strategy that’s especially sparse, or local) and some have to do with avoiding difficulties in working backward to what neural activity implies about what we were seeing (a problem with dense codes where many neurons are active). In the case of our own visual system, a key result in visual neuroscience is the agreement between the kinds of patterns cells in our primary visual cortex tend to be sensitive to and the kinds of patterns they should be measuring to achieve a sparse code for natural images. You can see what these patterns are like in the figure below: edge-like and bar-like structures of different sizes and tilted in different directions.
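
One simple way to put a number on "only a fraction of our cells will be active" is to count how many responses clear some threshold. The Python sketch below does just that; the response values and the threshold are invented for illustration, and researchers often use other sparseness measures (kurtosis, for example) instead.

```python
def active_fraction(responses, threshold):
    """Fraction of cells whose response magnitude exceeds the threshold."""
    active = sum(1 for r in responses if abs(r) > threshold)
    return active / len(responses)


# Made-up responses from ten hypothetical cells to a single image.
sparse_population = [0.0, 0.0, 0.9, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0, 0.0]
dense_population = [0.8, 0.7, 0.9, 0.6, 0.2, 0.95, 0.7, 0.1, 0.85, 0.9]

print(active_fraction(sparse_population, threshold=0.5))  # one cell in ten
print(active_fraction(dense_population, threshold=0.5))   # most of the population
```

A sparse code keeps this number low on average across the images an observer typically sees, while a dense code has most of the population firing for most images.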

Here’s the thing: If our visual world was different, the measurements that lead to a sparse code for natural scenes might be different, too. Oriented lines and edges wouldn’t cut it, but something else would. I think this matters because Geordi’s visual world is different - at least sometimes! This might mean that under some choices of wavelengths he’s going to measure with the VISOR, the statistics of the scenes he views are sufficiently different to lead his visual system to respond densely instead of sparsely - he may have a much larger fraction of cells active than you or I would when viewing the same scene. But why should this have anything to do with pain? Another reason to prefer a sparse code for neural coding is metabolic - neuron activity depends on energy resources, so a sparse code helps you use up that energy efficiently. Suddenly receiving sensory stimuli that elicit activity from a large fraction of your cells probably means suddenly chewing up a lot of those resources in an atypical way. Could that lead to some form of sensory pain? To be honest, I don’t know for sure, but I think it’s an interesting point to consider, especially given that the image statistics Geordi experiences may change dramatically depending on what wavelength bands he’s working with and what kind of environment he’s in. This may mean he both can’t learn a generally sparse neural code and that he routinely ends up with dense responses as he shifts his VISOR’s sensitivity around.

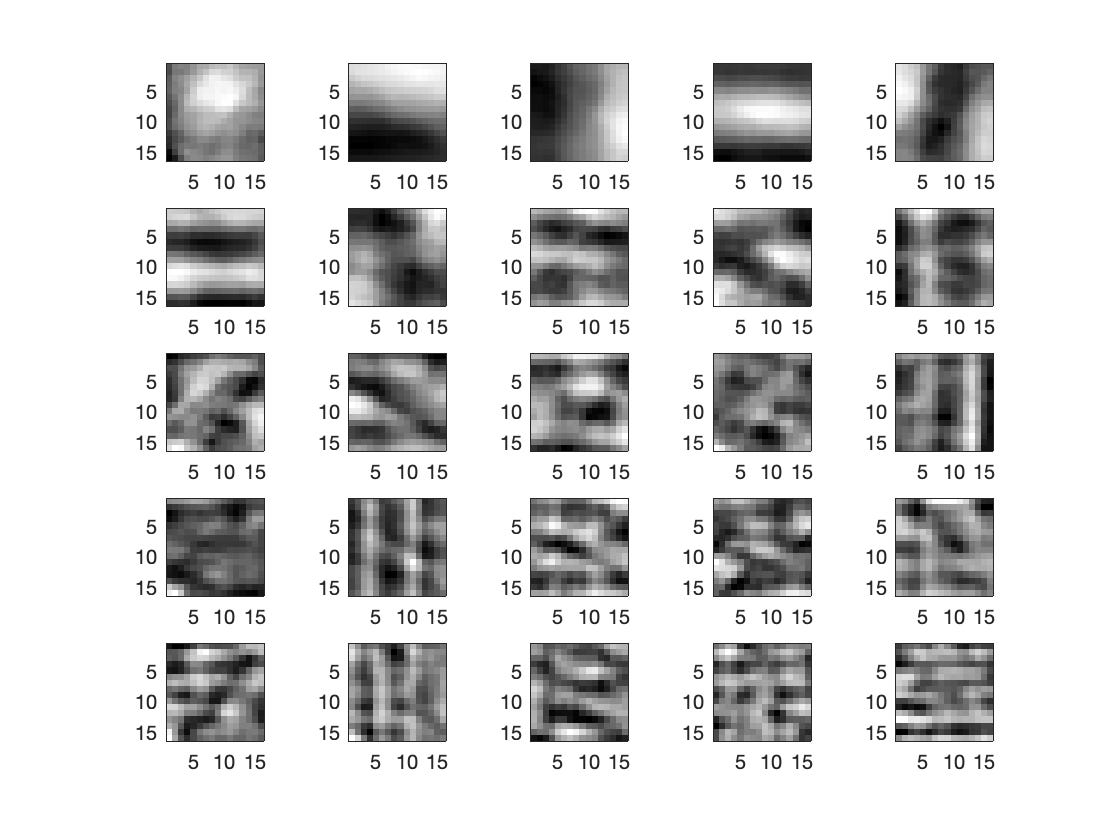

Can we tell if this is actually the case? This isn’t exactly the most rigorous thing in the world to do, but I used some crude computational tools to take a look at the nature of a sparse code we might recover for typical images in a Starfleet officer’s environment (pictures of the Enterprise’s bridge) and compare that to the sparse code we recover for images the way they appear through the VISOR. First, take a look at the receptive fields we get when we use typical images of the bridge as our natural environment.

Compare these to the mosaic of receptive fields above and you’ll see some similarities: tilted lines, tilted edges and a mix of thicker structures and skinnier patterns. Not bad for a few minutes messing with MATLAB after work and indicative of the variation we see across different cells in human primary visual cortex: Different cells are sensitive to patterns of light with different orientations of contrast and different spatial frequencies of contrast (wide or skinny stripes). So that’s what a quick-and-dirty Principal Components Analysis buys you in terms of a sparse code for natural scenes viewed without a prosthesis - does the game change when we use Geordi’s VISOR POV images instead?

What do we think? I, for one, think it’s a little different - maybe different enough to mean that this set of images might not lead to sparse activity if we process them with the first batch of cells. There are some commonalities to be sure - we still see some large-scale oriented edges in the top row, for example. But shortly after that in raster order, it starts to get a little grainy and also a little…well, blobby or something. I don’t want to read too much into this except to say that I think this maybe reinforces my idea that Geordi’s diverse range of “natural scenes” may mess with some principles of neural coding.
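
For readers who want to try this kind of comparison themselves, here is a rough Python sketch of the general recipe: cut an image into small patches, run PCA on them, and reshape the components into receptive-field-like filters. It is not the MATLAB code used for the figures described above, and the "scene" here is just smoothed random noise standing in for a real photograph. It assumes NumPy is installed, and the helper names `extract_patches` and `pca_components` are hypothetical. Note also that PCA decorrelates responses rather than explicitly enforcing sparsity, so treat it as the same quick-and-dirty stand-in the text describes.

```python
import numpy as np


def extract_patches(image, patch_size, n_patches, rng):
    """Sample square patches at random positions and flatten each to a row."""
    height, width = image.shape
    rows = rng.integers(0, height - patch_size + 1, size=n_patches)
    cols = rng.integers(0, width - patch_size + 1, size=n_patches)
    return np.stack([
        image[r:r + patch_size, c:c + patch_size].ravel()
        for r, c in zip(rows, cols)
    ])


def pca_components(patches, n_components):
    """Return the leading principal axes of the patch collection."""
    centered = patches - patches.mean(axis=0)
    # Rows of vt are orthonormal principal axes, ordered by variance explained.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:n_components]


rng = np.random.default_rng(0)
# Stand-in "scene": doubly integrated noise, which has smooth large-scale structure.
image = rng.standard_normal((64, 64)).cumsum(axis=0).cumsum(axis=1)

patches = extract_patches(image, patch_size=8, n_patches=500, rng=rng)
components = pca_components(patches, n_components=16)
filters = components.reshape(16, 8, 8)  # one 8x8 "receptive field" per component
print(filters.shape)
```

Swapping in two different image sets (say, ordinary photos and false-color sensor images) and plotting the resulting `filters` side by side is the sort of comparison the paragraphs above describe.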

Is this the final word on the pain Geordi manages while using the VISOR? Surely not, but I think it offers a neat excuse for talking about the principles that we think might shape the patterns we measure with cells in our visual system, and how changes in what’s in the environment may affect the way we process natural scenes.

Prosthetic vision in the 21st century and wearing a VISOR in the 20th century

I’ll wrap this up with one last point about Geordi’s experience that I think is interesting to consider relative to what we know about the typical human visual system (and 21st century prosthetic vision). Actually, this isn’t so much about Geordi’s experience as it is about LeVar Burton’s. Consider the following quote from Mr. Burton about the realities of wearing the VISOR on-set:

"People always ask me, 'Could you see out of that thing?' And the answer is, no, I couldn't. It was always very funny to me because when the actor puts the visor on 85 to 90 percent of my vision was taken away, yet I'm playing a guy who sees more than everyone else around him. So that's just God's cruel little joke."

That’s right - Geordi is a fictional character with congenital blindness that is dramatically reversed by the VISOR, but the very real LeVar Burton had to deal with serious challenges on the set due to the VISOR prop.

There are two things about this that I think are interesting. First, the typical human visual system does a solid job of yielding coherent percepts even when it needs to do a lot of interpolation, or using the information you have to make guesses about missing data. There are so many wonderful examples of this that it’s hard to choose just one, but I picked the image below (from Dr. Peter Tse) because I think it does a great job of highlighting the richness of the experiences your visual system can yield from fairly limited data: Just a few small contours, curved and aligned the right way at the base of some black triangles, are enough for a 3D object to emerge. While it isn’t quite the same as having to look at the world through the dense vertical slats of the VISOR prop, it still shows off your visual system’s capacity to reach beyond the raw data and inform you about objects, surfaces, and textures. LeVar Burton was relying on this ability a ton given the occluded view of the world he had to deal with while wearing the VISOR, and his performance should convince you that your visual brain does a marvelous job filling in gaps in your measurements of a scene.

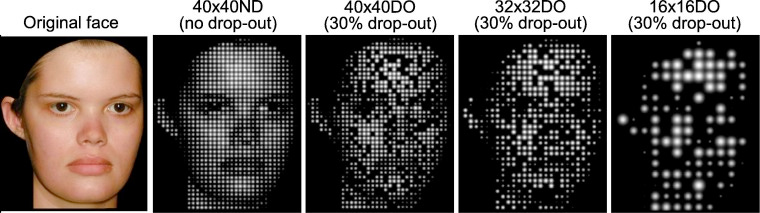

The second thing that I think is interesting about LeVar Burton’s experience wearing the VISOR is that it inadvertently aligns with the realities of prosthetic vision in the present day. While the VISOR appears to incorporate a very dense array of sensors and signalling that supports transmission of those measurements with high resolution preserved, modern visual prostheses are still pretty far from that outcome. While there have been some dramatic improvements in the hardware used to stimulate retinal cells via prosthetic implants, current state-of-the-art devices generally provide visual experience that is coarser than typical vision and subject to phenomena like sensor drop-out. Below you can see approximations of what variation in these parameters might look like from Irons et al. (2017). In this study, the goal was to examine how face recognition might be impacted by reductions in image quality associated with a visual prosthesis, and as you can see, a little bit of degradation is one thing, but too much leaves your visual system without enough data to fill in the gaps. Like LeVar Burton (but unlike Geordi!) a current user of a visual prosthesis probably has to do a lot of interpolation to cope with the limits imposed on what they can see.

Image credit: Irons et al., 2017, Vision Research
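
To get a feel for the two kinds of degradation mentioned above (coarse resolution and sensor drop-out), here is a toy Python sketch. It is not the simulation method from Irons et al. (2017); it just averages an image over square blocks, as if each block were one electrode, and randomly silences a fraction of them. The function name `simulate_prosthetic_view` and its parameters are hypothetical.

```python
import random


def simulate_prosthetic_view(image, block, dropout, seed=0):
    """Coarsen a grayscale image (list of rows) and silence random blocks.

    block:   side length, in pixels, of the region one "electrode" covers
    dropout: probability that a given electrode is dead (rendered as 0.0)
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            if rng.random() < dropout:
                value = 0.0  # dead electrode: no signal from this region
            else:
                total = sum(image[r][c] for r in rows for c in cols)
                value = total / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    output[r][c] = value
    return output


# A tiny 4x4 gradient "image" viewed through 2x2 electrodes with no drop-out.
gradient = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
for row in simulate_prosthetic_view(gradient, block=2, dropout=0.0):
    print(row)
```

Raising `block` or `dropout` on a real photograph quickly shows how little it takes before there is not enough left for interpolation to work with.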

To me, this is a good place to stop for now because I feel like it gives me an especially compelling perspective on what I see in my favorite episodes of TNG. This last little connection between the experience of wearing a modern visual prosthesis and the sensory demands placed on one of my favorite actors from childhood makes watching Geordi a different experience now than it was when I was a kid. Specifically, it bifurcates what’s happening on screen in a fascinating way. It makes me feel like I’m watching co-existing parallel worlds where Geordi La Forge is the master of all he sees in engineering thanks to the VISOR, and next to him in the multiverse is LeVar Burton, expertly navigating the visual impairment imposed by a prop to make us believe in the future.

References

Bedny, M. (2017). Evidence from Blindness for a Cognitively Pluripotent Cortex. Trends in Cognitive Sciences, 21(9), 637–648. https://doi.org/10.1016/j.tics.2017.06.003

Irons, J. L., Gradden, T., Zhang, A., He, X., Barnes, N., Scott, A. F., & McKone, E. (2017). Face identity recognition in simulated prosthetic vision is poorer than previously reported and can be improved by caricaturing. Vision Research, 137, 61–79. https://doi.org/10.1016/j.visres.2017.06.002

Ostrovsky, Y., Andalman, A., & Sinha, P. (2006). Vision following extended congenital blindness. Psychological Science, 17(12), 1009–1014. https://doi.org/10.1111/j.1467-9280.2006.01827.x

Sacks, O. (1995). “To see and not see”, An Anthropologist on Mars. Alfred A. Knopf, New York, NY, USA.